I'm heading into hospital for an operation on my neck later today and recuperation may take a while. Hence this blog is not going to be updated for quite a few weeks.

While I'm away, please feel free to add comments on the kind of kernel/system/BIOS related issues you're interested in, to help me focus blog entries in 2010...

UPDATE:

The operation was a success - I now have a titanium disc in my neck and have regained the sense of touch in my feet and hands. I can now walk again without the help of a walking stick. I'm keeping my keyboarding/desk activity to an absolute minimum. I need to do a whole load of exercises to get my neck more mobile, and my immune system is working overtime, which makes me really physically tired.

Thursday, 26 November 2009

Tuesday, 24 November 2009

itop - look at top interrupt activity

When I want to see interrupt activity on a Linux box I normally use the following rune:

watch -n 1 cat /proc/interrupts

..which simply dumps out the interrupt information every second. Another way is to use itop, which has the benefit of showing the interrupt rate, something my rune above lacks.

To install, use:

sudo apt-get install itop

and to run, use:

itop

The output is refreshed every second, and outputs something like the following:

INT NAME RATE MAX
0 [PIC-edge time] 154 Ints/s (max: 154)
14 [PIC-edge ata_] 16 Ints/s (max: 16)
18 [PIC-fasteoi ehci] 4 Ints/s (max: 4)
22 [PIC-fasteoi ohci] 2 Ints/s (max: 2)
29 [MSI-edge i915] 38 Ints/s (max: 38)
30 [MSI-edge iwl3] 6 Ints/s (max: 6)

Unfortunately itop does truncate the interrupt names, but I'm not so worried about this - I generally want to see very quickly if a machine is suffering from interrupt saturation or is missing interrupts, which I can get from itop easily.

To see all interrupts, run itop with:

itop -a

And to run for a number of iterations, run with the -n flag, e.g.

itop -n 10
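An interrupt rate like the one itop shows can be derived by sampling the per-CPU counters in /proc/interrupts twice and dividing the difference by the sampling interval. Here is a minimal Python sketch of that idea, assuming the usual /proc/interrupts layout (a header row of CPU names, then one row per IRQ with per-CPU counts followed by a description). It is not itop's own implementation, and parse_interrupts, interrupt_rates and sample are hypothetical names:

```python
import time
from pathlib import Path

def parse_interrupts(text):
    """Map each IRQ label to (total count over all CPUs, description)."""
    lines = text.strip().splitlines()
    ncpus = len(lines[0].split())          # header row: CPU0 CPU1 ...
    table = {}
    for line in lines[1:]:
        if ":" not in line:
            continue
        irq, rest = line.split(":", 1)
        fields = rest.split()
        counts = []
        # Per-CPU counters come first; rows such as "ERR:" carry just one total.
        for field in fields[:ncpus]:
            if not field.isdigit():
                break                      # reached the description text
            counts.append(int(field))
        table[irq.strip()] = (sum(counts), " ".join(fields[len(counts):]))
    return table

def interrupt_rates(before, after, interval):
    """Interrupts per second for every IRQ present in both snapshots."""
    return {
        irq: ((count - before[irq][0]) / interval, desc)
        for irq, (count, desc) in after.items()
        if irq in before
    }

def sample(interval=1.0, path="/proc/interrupts"):
    """Take two snapshots `interval` seconds apart and print non-zero rates."""
    proc = Path(path)
    before = parse_interrupts(proc.read_text())
    time.sleep(interval)
    after = parse_interrupts(proc.read_text())
    rates = interrupt_rates(before, after, interval)
    for irq, (rate, desc) in sorted(rates.items(), key=lambda kv: -kv[1][0]):
        if rate > 0:
            print(f"{irq:>4} [{desc[:20]}] {rate:.0f} Ints/s")

if __name__ == "__main__" and Path("/proc/interrupts").exists():
    sample()
```

Calling sample() in a loop would give a crude itop-like display; tracking the maximum rate per IRQ, as itop's MAX column does, is left out to keep the sketch short.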

Kernel Early Printk Messages

I've been messing around with the earlyprintk kernel options to allow me to get some form of debug out before the console drivers start later on in the kernel init phase. The earlyprintk kernel option supports debug output via the VGA, serial port and USB debug port.

The USB debug port is of interest - most modern systems seem to provide a debug port capability which allows one to send debug over USB to another machine. To check if your USB controller has this capability, use:

sudo lspci -vvv | grep "Debug port"

and look for a string such as "Capabilities: [58] Debug port: BAR=1 offset=00a0". You may have more than one of these on your system, so make sure you use the correct one.
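When a machine has several EHCI controllers, it helps to see which PCI device each "Debug port" capability belongs to. Here is a minimal Python sketch, assuming lspci -vvv prints each device on an unindented line followed by indented detail lines; run it as root, as above, since the capability lines may be hidden otherwise. find_debug_ports is a hypothetical helper, and don't assume the listing order matches the N in earlyprintk=dbgpN without checking:

```python
import re
import subprocess

# e.g. "Capabilities: [58] Debug port: BAR=1 offset=00a0"
DEBUG_PORT_RE = re.compile(
    r"Capabilities: \[([0-9a-f]+)\] Debug port: BAR=(\d+) offset=([0-9a-f]+)")

def find_debug_ports(lspci_text):
    """Return (device line, capability offset, BAR field, register offset) tuples."""
    ports = []
    device = None
    for line in lspci_text.splitlines():
        if line and not line[0].isspace():
            device = line.strip()          # new device, e.g. "00:1d.7 USB Controller: ..."
            continue
        match = DEBUG_PORT_RE.search(line)
        if match:
            ports.append((device,
                          int(match.group(1), 16),
                          int(match.group(2)),
                          int(match.group(3), 16)))
    return ports

if __name__ == "__main__":
    try:
        out = subprocess.run(["lspci", "-vvv"], capture_output=True,
                             text=True, check=True).stdout
    except (OSError, subprocess.CalledProcessError):
        out = ""                           # lspci missing or failed
    for dev, cap, bar, offset in find_debug_ports(out):
        print(f"{dev}\n    capability @0x{cap:02x}, BAR={bar}, offset=0x{offset:04x}")
```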

One selects this mode of earlyprintk debugging using:

earlyprintk=dbgp

for the default first port, or select the Nth debug enabled port using:

earlyprintk=dbgpN

One also needs to build a kernel with the following config option enabled:

CONFIG_EARLY_PRINTK_DBGP=y

On my debug set-up I used a NET20DC-USB Hi-Speed USB 2.0 Host-to-Host Debug Device to connect the target machine to a host, on which I captured the USB debug output via /dev/ttyUSB0 using minicom. Rather than bore you with the details, I'll point you at the kernel documentation in Documentation/x86/earlyprintk.txt, which explains it all.

As it was, I needed to tweak the earlyprintk driver to put in some delays in the EHCI probing and reset code to get it working on my fairly fast target laptop.

My experience with this approach wasn't great - I had to plug/unplug the debug device quite frequently for the earlyprintk EHCI reset and probe to work. Also, the EHCI USB driver initialisation later on in the kernel boot hung, which wasn't useful.

Overall, I may have had problems with the host/target and/or the NET20DC-USB host-to-host device, but it did allow me to get some debug out, albeit rather unreliably.

Probably an easier way to get debug out is just using the boot option:

earlyprintk=vga

however this has the problem that the messages are eventually overwritten by the real console.

Finally, for anyone with old legacy serial ports on their machine (which is quite unlikely nowadays with newer hardware), one can use:

earlyprintk=serial,ttySn,baudrate

where ttySn is the nth tty serial port.

One can also append the ",keep" option so that earlyprintk is not disabled once the real console is up and running.

So, with earlyprintk, there is some chance of being able to get some form of debug out to a device to allow one to debug kernel problems that occur early in the initialisation phase.

Sunday, 22 November 2009

Results from the "Linux needs to have..." poll

Before I kick off another poll, here are the totally unscientific results of my "Linux needs to have..." poll:

| Linux needs to have... | Votes |
|---|---|
| Working Audio | 16 |
| Reliable suspend/resume | 11 |
| Better video support | 10 |
| Better battery life | 9 |
| Better wifi | 8 |
| Faster boot times | 6 |
| Nothing more - it rocks | 2 |

It's based on a very small sample (even though we get thousands of hits a month), so I'd hate to draw many conclusions from the votes. However, it looks like audio is giving a lot of users grief and this needs fixing. That reliable suspend/resume comes second does not surprise me, as I see a lot of weird ACPI and driver suspend/resume issues on the hardware I work with. It's interesting to see that faster boot times are low on the wish list, but it does not surprise me that video and wifi support are a higher priority (since they need to work!) than booting faster.

And to finish with, few users think Linux needs no improvement - this spurs us on to get subsystems fixed to make it rock!

Friday, 20 November 2009

Debugging grub2 with gdb

Ľubomír Rintel has written a detailed and very helpful debugging guide for grub2. The guide covers how to debug with gdb using emulators such as QEMU and Bochs as well as traditional serial line debugging using a null-modem.

The tricky part is pulling in the debug and symbol files for dynamically loaded modules; Ľubomír has solved this with a gdb and Perl script.

The guide gives some useful tricks which can be used not just with grub2 but other boot loaders too. It's well worth a look just to learn some useful gdb hacks.

Thursday, 19 November 2009

Chumby One - Cute and affordable fun.

A little while ago I blogged about the Chumby, a fine little ARM+Linux based internet device made by Chumby Industries.

Well, now they have gone one step further and produced the Chumby One (see right), which is currently available for pre-order at $99. This new version comes with some extra features compared to the original: an FM radio, support for a rechargeable lithium-ion battery and an easier-to-use volume dial.

There is a great blog article about the design rationale behind this new product on bunnie's blog, including a video of the production line and some photos of the motherboard.

I'm quite a fan of the Chumby Classic, and it's great to see a far more affordable little brother being added to the product range! I wish Chumby Industries every success with the Chumby One.

Debugging with QEMU and gdb

QEMU is a very powerful tool, and combined with gdb it has allowed me to debug Intel-based boot loaders. Here is a quick rundown of how I drive it:

Firstly, I recommend removing KVM, as it has caused me some grief with catching breakpoints. This means QEMU will run slower, but I want to remove anything that could complicate my debugging environment.

Start QEMU with the -s option (shorthand for a gdb server on TCP port 1234) and the -S option (halt the CPU at startup until gdb attaches and tells it to continue):

$ qemu -s -S -bios bios-efi.bin -m 1024 karmic-efi-qcow2.img -serial stdio

..then in another terminal, attach gdb:

$ gdb
GNU gdb (GDB) 7.0-ubuntu
Copyright (C) 2009 Free Software Foundation, Inc.
License GPLv3+: GNU GPL version 3 or later
This is free software: you are free to change and redistribute it.
There is NO WARRANTY, to the extent permitted by law. Type "show copying"
and "show warranty" for details.
This GDB was configured as "x86_64-linux-gnu".
For bug reporting instructions, please see:
(gdb) target remote localhost:1234
Remote debugging using localhost:1234
0x0000fff0 in ?? ()
(gdb)

..and start the boot process..

(gdb) c

..and get debugging!
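The target remote localhost:1234 step talks the GDB Remote Serial Protocol to the stub that -s starts inside QEMU. Each packet is framed as $payload#checksum, where the checksum is the sum of the payload bytes modulo 256, written as two hex digits. Here is a minimal Python sketch, for illustration only, that frames packets and asks a listening stub why the target stopped (the ? packet). probe_gdb_stub is a hypothetical helper; gdb itself remains the real client:

```python
import socket

def rsp_packet(payload: str) -> bytes:
    """Frame a GDB Remote Serial Protocol packet as $<payload>#<checksum>."""
    checksum = sum(payload.encode("ascii")) % 256   # modulo-256 sum of payload bytes
    return f"${payload}#{checksum:02x}".encode("ascii")

def probe_gdb_stub(host="localhost", port=1234, timeout=2.0) -> bytes:
    """Send the '?' (halt reason) packet to a gdb stub and return its raw reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(rsp_packet("?"))
        # The stub acks with '+' and then sends a stop-reply packet; a single
        # recv() may return only part of that, which is fine for a liveness check.
        return sock.recv(4096)

if __name__ == "__main__":
    try:
        print(probe_gdb_stub())
    except OSError as err:
        print(f"no gdb stub listening: {err}")
```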

Wednesday, 18 November 2009

Bash file truncation

Today I wanted to truncate a file from bash and discovered that redirecting the output of the : builtin (the null command, which does nothing and succeeds) worked a treat:

:>example-file-to-truncate.txt

..I've been using bash for years and completely overlooked this gem - I should have devoted more effort to reading the POSIX 1003.2 standard or the bash manual a long while ago...
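The trick works because a > redirection opens the file for writing with O_CREAT and O_TRUNC, and since : writes nothing, the file is left empty (and is created if it did not exist). Here is a minimal Python sketch of the same system-call sequence, for illustration; truncate_like_shell is a hypothetical name:

```python
import os

def truncate_like_shell(path: str) -> None:
    """Mimic `:>path`: open write-only with create + truncate, write nothing, close."""
    # 0o666 is filtered through the process umask, as it is for the shell.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o666)
    os.close(fd)
```

In everyday Python, open(path, "w").close() has the same effect, since mode "w" also creates and truncates.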

Saturday, 14 November 2009

Booting Ubuntu Karmic with grub2 and EFI

The past few days I've been tinkering with the TianoCore EFI and trying to get Ubuntu Karmic to boot with the EFI enabled grub2. To make debugging easier, I did all this inside a virtualised QEMU environment. A lot of this earlier hackery was blogged about in a previous article.

In trying to get this to work I hit a couple of issues; figuring out workarounds took some thought and time. Some of it is still "work in progress", but at least now I know what needs to be fixed.

Below is a video (grabbed using the recordmydesktop tool) showing Ubuntu Karmic booting:

First you see the TianoCore EFI starting and then loading grub2 - this then loads a very slimmed down grub.cfg and eventually boots the system.

I hit several issues. Firstly, the kernel oops'd when calling virt_efi_get_next_variable() - for some reason calling this EFI runtime service causes the kernel to oops. Other EFI runtime services seem to work correctly, so my current belief is that the bug is not in the kernel EFI driver, but this needs a little more poking around to verify.

The second issue is that loading some grub2 modules and using some fundamental grub2 commands such as 'set' causes the EFI to get caught in a loop. It took me a while to corner this - eventually I cut the default grub config file down to a really minimal version which could at least boot the kernel! Anyhow, this grub2 issue is first on my list of bugs to fix.

Debugging these issues is a little tricky, but using QEMU helps as I can dump out debug from grub2 over a pseudo serial port which I can capture and view in real-time. Secondly, debugging the kernel using a serial console in QEMU (using the console=ttyS0 boot option) allows me to capture all kernel boot messages (including the offending kernel oops).

I still need to look into ACPI support and how to get the video initialised to get more than bog-standard VGA resolution, but these are currently lower priorities.

Without QEMU and Tristan Gingold's port of the TianoCore EFI to QEMU, this work would have been much harder.

Wednesday, 11 November 2009

Improving battery life on the HP Mini

A few weeks ago I wrote about my experience using an HP Mini netbook - this follow-up article describes how I reduced the power consumption on this device with some fairly basic steps.

I started from a fresh clean install of Ubuntu Karmic 9.10 (32 bit) and pulled in all the latest updates. I then installed powertop using:

sudo apt-get install powertop

Then I unplugged the power, let powertop run for 10 minutes to settle, and noted that the ACPI power estimate was ~13.0W.

My first saving was to disable Bluetooth totally - turning off unused radios is a good way of saving power. I don't use Bluetooth at all, so to save the memory the driver would otherwise use I blacklisted the btusb module by adding the line "blacklist btusb" to /etc/modprobe.d/blacklist.conf (rather a heavy-handed approach!). I then rebooted and re-measured the power consumption - down to 9.7W.

My next tweak was to enable laptop mode. To do this, edit /etc/default/acpi-support and set ENABLE_LAPTOP_MODE=true. I'm not sure this is a big win for SSD-based devices like my netbook; I believe one will see a bigger power saving on HDD-based devices.

I noted that powertop was recommending usbcore.autosuspend=1 and disabling HAL polling, so I thought I'd follow its wisdom and make these tweaks.

I edited /etc/default/grub and changed the GRUB_CMDLINE_LINUX_DEFAULT setting by adding usbcore.autosuspend=1, and then ran sudo update-grub to update /boot/grub/grub.cfg.

Next I ran:

sudo hal-disable-polling --device /dev/sdb

..for some reason /dev/sdb was being polled, and I don't need the overhead of this auto-sensing.

I rebooted and re-measured the power consumption - down to 8.7W. Not bad.

Next I totally disabled compiz and turned my display brightness down - this saved 0.3W, bringing the system down to a reasonable 8.4W. I'm not sure how much of that came from disabling compiz, but turning off compositing saves the GPU some work, so it should save some power.

My final tweak was to stop the GNOME Terminal cursor from blinking - this saves 2 wakeups a second (not much!). To disable the cursor blink for the Default profile I ran gconf-editor and set /apps/gnome-terminal/profiles/Default/cursor_blink_mode to off. This saving is negligible, but it makes me feel good to know I've saved two wakeups a second :-)

So now my machine was in a usable state and down to 8.4W. I could tweak my access point to send fewer beacons and so save Wifi wakeups, but that's going a little too far, even for me! I then wondered how much power Wifi was using, so I disabled it, and after 15 minutes my system dropped down to 7.3W. This is good to know but a little pointless for my normal work pattern, since I need net access - it is a netbook after all!

I'm sure I can save a little more power, but for now it's a good win for a little amount of work. I'm open to any suggestions on how to save more power. Please let me know!
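The readings above can be tallied to see where the savings came from. Here is a small Python sketch using the powertop estimates quoted in this post (the step labels are mine). The runtime figure assumes battery life scales inversely with average power draw, which is only a rough approximation:

```python
# powertop ACPI power estimates (watts) after each step described above.
readings = [
    ("fresh Karmic install", 13.0),
    ("Bluetooth disabled (btusb blacklisted)", 9.7),
    ("laptop mode, USB autosuspend, HAL polling off", 8.7),
    ("compiz off, display dimmed", 8.4),
]

def savings(readings):
    """Yield (step, watts, watts saved versus the previous step)."""
    prev = None
    for step, watts in readings:
        saved = 0.0 if prev is None else round(prev - watts, 2)
        yield step, watts, saved
        prev = watts

baseline, final = readings[0][1], readings[-1][1]
total_saved = round(baseline - final, 2)
percent_saved = 100.0 * total_saved / baseline
# For a fixed battery capacity, runtime ~ capacity / power, so the gain is a ratio.
runtime_gain = baseline / final

if __name__ == "__main__":
    for step, watts, saved in savings(readings):
        print(f"{watts:5.1f} W  (-{saved:.1f} W)  {step}")
    print(f"total: -{total_saved:.1f} W ({percent_saved:.0f}%), ~{runtime_gain:.2f}x runtime")
```

By this estimate, the 4.6W saved is roughly a third of the original draw, which would stretch battery life by about half again, assuming the draw stays constant.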

Tuesday, 10 November 2009

Audio Weirdnesses

Yesterday I was poking around a perplexing audio recording issue; quite bizarrely, stereo (2 channel) audio from the internal microphone would record perfectly, but mono (1 channel) would not. After working my way down through the driver and through ALSA, I got to the point where I could not see how there could be any driver problem, so the issue had to be with the ALSA user space library or the hardware (or both!).

My colleague spotted an interesting characteristic - using alsamixer to set the capture level to 100% left channel and 0% right channel (or vice versa) made the mono recording work. Further investigation using a stereo recording of a pure sine wave (whistling into the microphone!) showed that the left channel was the exact inverse of the right channel. Then the penny dropped - the mono recording was essentially ALSA summing the left and right channels, producing a near perfect zero recording since the left channel was the inverse of the right. Doh.
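The cancellation is easy to reproduce numerically. Here is a minimal Python sketch, assuming an idealised 1 kHz sine on the left channel, its exact inverse on the right, and a mono downmix that averages the two channels (real microphone signals won't cancel perfectly, hence only a "near perfect zero"). downmix is a hypothetical helper that applies one pair of gains, in the same spirit as the ttable workaround below:

```python
import math

def sine(n, freq=1000.0, rate=48000.0, amp=0.5):
    """n samples of a sine wave at `freq` Hz, sampled at `rate` Hz."""
    return [amp * math.sin(2 * math.pi * freq * i / rate) for i in range(n)]

def downmix(left, right, gain_left, gain_right):
    """Mix stereo to mono: out = gain_left * L + gain_right * R."""
    return [gain_left * l + gain_right * r for l, r in zip(left, right)]

def peak(samples):
    return max(abs(s) for s in samples)

left = sine(480)                                # 10 cycles of 1 kHz at 48 kHz
right = [-s for s in left]                      # right channel arrives phase-inverted
plain_mono = downmix(left, right, 0.5, 0.5)     # (L + R) / 2: cancels out
fixed_mono = downmix(left, right, 0.5, -0.5)    # (L - R) / 2: recovers the signal

if __name__ == "__main__":
    print(f"plain mono peak: {peak(plain_mono):.3f}")
    print(f"fixed mono peak: {peak(fixed_mono):.3f}")
```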

As it happens, this very same issue has been discussed on LKML, with a workaround suggested by Takashi Iwai as follows:

Put the following in ~/.asoundrc:

pcm.imix {
  type plug
  slave.pcm "hw"
  ttable.0.0 0.5
  ttable.0.1 -0.5
}

And record using:

arecord -Dimix -c1 test.wav

Urgh. Well, at least there is some kind of ALSA workaround.

So the moral of the story is to twiddle with the left and right channel levels, do some stereo recording and look at the results before hacking through the driver. I should have applied Occam's razor - instead I assumed the complex part (the driver) was the problem.

Friday, 6 November 2009

QEMU and EFI BIOS hackery

Earlier this week I blogged about QEMU and EFI BIOS. Trying to debug a problem with grub2-efi-ia32 has given me a few little headaches but I'm finding ways to work around them all.

The first issue is getting a system installed with an EFI BIOS. My quick hack was to create a 4GB QEMU qcow2 disk image and then, inside it, create a small EFI FAT12 boot partition using fdisk (partition type 0xef in the first primary partition). I then installed Ubuntu Karmic Desktop with ext4 and swap in primary partitions 2 and 3 by booting with the conventional BIOS. Finally, I installed grub2-efi-ia32 in the EFI boot partition and booted QEMU using the TianoCore EFI BIOS that has been ported to QEMU.

One problem is that the EFI BIOS does not scroll the screen, so once output reaches the bottom of the screen it just keeps overwriting the last line, making debugging with printf() style prints nearly impossible. Then I found that the BIOS also emits characters over a serial port, which QEMU can emulate. Unfortunately, the output contains VT control characters for cursor positioning and pretty console colours, which makes reading it a little painful. So I hacked up a simple tool to take the output from QEMU and strip out the VT control chars to make the text easier to read.

My QEMU boot line is now as follows:

qemu -bios bios-efi.bin -m 1024 karmic-efi-qcow2.img -serial stdio 2>&1 | ./parse-output

..and this dumps the output from the BIOS and grub2 to stdout in a more readable form.

The parse-output tool is a little hacky - but does the job. For reference, I've put it in my debug repository here.
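As an independent illustration of the same idea (not the parse-output code itself), here is a minimal Python sketch, assuming the firmware emits standard ANSI/VT100 CSI sequences (ESC [ parameters final-byte) plus a few two-byte ESC sequences. strip_vt and filter_stream are hypothetical names:

```python
import re

# CSI: ESC '[' parameter bytes (0x30-0x3f), intermediate bytes (0x20-0x2f), final byte (0x40-0x7e)
CSI_RE = re.compile(r"\x1b\[([0-?]*)[ -/]*([@-~])")
# Remaining two-byte escapes, e.g. ESC 7 / ESC 8 (save/restore cursor) or ESC c (reset)
ESC2_RE = re.compile(r"\x1b[^\[]")

def strip_vt(text):
    """Remove VT100/ANSI escape sequences from text."""
    def replace_csi(match):
        # Crude heuristic: cursor positioning (final byte H or f) usually moves
        # to a new row, so emit a newline to keep the text lines apart.
        return "\n" if match.group(2) in "Hf" else ""
    return ESC2_RE.sub("", CSI_RE.sub(replace_csi, text))

def filter_stream(inp, out):
    """Copy `inp` to `out` line by line with escape sequences removed."""
    for line in inp:
        out.write(strip_vt(line))
```

Calling filter_stream(sys.stdin, sys.stdout) from a small script would let it stand in for ./parse-output at the end of the QEMU pipeline above.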

Grub2 allows one to enable some level of debugging output by issuing the following command:

set debug=all

..which gives me some idea of what's working or broken at a fairly low level before I start attaching gdb. Fortunately, debugging using gdb has been fairly well documented here - I now just need to shoe-horn in a small patch to allow me to attach gdb to grub2 from outside QEMU - but that's for another debug session...

Performance Monitoring using Ingo Molnar's perf tool

The perf tool by Ingo Molnar allows one to do some deep performance analysis using Linux performance counters. It covers a broad range of performance monitoring at both the hardware and software level. In this blog posting I just want to give you a taste of some of the ways to use this powerful tool.

One needs to build this from the kernel source, but it's fairly easy to do:

1) Install libelf-dev; on an Ubuntu system use:

sudo apt-get install libelf-dev

2) Get the kernel source

either from kernel.org or from the Ubuntu kernel source package:

apt-get source linux-image-2.6.31-14-generic

3) ..and build the tool..

in the kernel source:

cd tools/perf

make

There is plenty of documentation on this tool in the tools/perf/Documentation directory and I recommend reading this to get a full appreciation of what the tool can do and how to drive it.

My first example is a trivial performance counter example on the dd command:

./perf stat dd if=/dev/zero of=/dev/null bs=1M count=4096
4096+0 records in
4096+0 records out
4294967296 bytes (4.3 GB) copied, 0.353498 s, 12.1 GB/s

Performance counter stats for 'dd if=/dev/zero of=/dev/null bs=1M count=4096':

355.148424 task-clock-msecs # 0.998 CPUs
18 context-switches # 0.000 M/sec
0 CPU-migrations # 0.000 M/sec
501 page-faults # 0.001 M/sec
899141721 cycles # 2531.735 M/sec
2212730050 instructions # 2.461 IPC
67433134 cache-references # 189.873 M/sec
6374 cache-misses # 0.018 M/sec

0.355829317 seconds time elapsed
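The figures after each # in the perf stat output appear to be derived from the raw counts, and the arithmetic below reproduces them. Here is a small Python sketch recomputing three of them from the numbers above, plus a cache miss ratio that this version of perf doesn't print:

```python
# Raw counts copied from the `perf stat dd ...` run above.
task_clock_ms = 355.148424      # CPU time used by the task, in milliseconds
elapsed_s = 0.355829317         # wall-clock time
cycles = 899141721
instructions = 2212730050
cache_references = 67433134
cache_misses = 6374

cpus_utilised = task_clock_ms / (elapsed_s * 1000)        # "# 0.998 CPUs"
cycles_per_sec_m = cycles / (task_clock_ms / 1000) / 1e6  # "# 2531.735 M/sec"
ipc = instructions / cycles                               # "# 2.461 IPC"
miss_ratio = cache_misses / cache_references              # not printed by perf here

if __name__ == "__main__":
    print(f"CPUs utilised : {cpus_utilised:.3f}")
    print(f"cycles        : {cycles_per_sec_m:.3f} M/sec")
    print(f"IPC           : {ipc:.3f}")
    print(f"cache misses  : {100 * miss_ratio:.4f}% of references")
```

An IPC of about 2.5 and a miss ratio below 0.01% are consistent with dd spending its time copying zeroes in a tight loop that stays in cache.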

But we can dig deeper than this. How about seeing what's really going on in the application and the kernel? The next command records samples into a file, perf.data, which we can then examine using perf report:

./perf record -f dd if=/dev/urandom of=/dev/null bs=1M count=16
16+0 records in
16+0 records out
16777216 bytes (17 MB) copied, 2.39751 s, 7.0 MB/s
[ perf record: Captured and wrote 1.417 MB perf.data (~61900 samples) ]

..and generate a report on the significant CPU consuming functions:

./perf report --sort comm,dso,symbol | grep -v "0.00%"

# Samples: 61859

#

# Overhead Command Shared Object Symbol

# ........ ....... ......................... ......

#

75.52% dd [kernel] [k] sha_transform

14.07% dd [kernel] [k] mix_pool_bytes_extract

3.38% dd [kernel] [k] extract_buf

2.33% dd [kernel] [k] copy_user_generic_string

1.36% dd [kernel] [k] __ticket_spin_lock

0.90% dd [kernel] [k] _spin_lock_irqsave

0.72% dd [kernel] [k] _spin_unlock_irqrestore

0.67% dd [kernel] [k] extract_entropy_user

0.27% dd [kernel] [k] default_spin_lock_flags

0.22% dd [kernel] [k] sha_init

0.11% dd [kernel] [k] __ticket_spin_unlock

0.08% dd [kernel] [k] copy_to_user

0.04% perf [kernel] [k] copy_user_generic_string

0.02% dd [kernel] [k] clear_page_c

0.01% perf [kernel] [k] memset_c

0.01% dd [kernel] [k] page_fault

0.01% dd /lib/libc-2.10.1.so [.] 0x000000000773f6

0.01% perf [kernel] [k] __ticket_spin_lock

0.01% dd [kernel] [k] native_read_tsc

0.01% dd /lib/libc-2.10.1.so [.] strcmp

0.01% perf [kernel] [k] kmem_cache_alloc

0.01% perf [kernel] [k] __block_commit_write

0.01% perf [kernel] [k] ext4_do_update_inode
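When a report runs to many lines it can help to roll the per-symbol percentages up. A small Python sketch, assuming the whitespace-separated layout shown above (overhead, command, shared object, [k] or [.] marker, symbol), that totals the overhead per command and shared object; overhead_by is an illustrative name, not part of perf.

```python
from collections import defaultdict


def overhead_by(lines, key=("comm", "dso")):
    """Sum perf report overhead percentages, grouped by command and/or DSO.

    Assumes lines look like: '75.52% dd [kernel] [k] sha_transform'.
    Comment lines starting with '#' and blank lines are skipped.
    """
    totals = defaultdict(float)
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        record = {"comm": fields[1], "dso": fields[2]}
        overhead = float(fields[0].rstrip("%"))
        totals[tuple(record[k] for k in key)] += overhead
    return dict(totals)


sample = """
75.52% dd [kernel] [k] sha_transform
14.07% dd [kernel] [k] mix_pool_bytes_extract
0.04% perf [kernel] [k] copy_user_generic_string
0.01% dd /lib/libc-2.10.1.so [.] strcmp
""".splitlines()

for group, pct in sorted(overhead_by(sample).items(), key=lambda kv: -kv[1]):
    print(f"{pct:6.2f}%  {' '.join(group)}")
```

Passing key=("comm",) groups by command only, which makes it easy to see how much of the sampled time was perf itself rather than the workload.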

..showing us where most of the CPU time is being consumed, down to the function names in the kernel, application and shared libraries.

One can drill down further. In the previous example strcmp() was using 0.01% of the CPU; perf annotate shows where within the function that time was spent:

./perf annotate strcmp

objdump: 'vmlinux': No such file

------------------------------------------------

Percent | Source code & Disassembly of vmlinux

------------------------------------------------

------------------------------------------------

Percent | Source code & Disassembly of libc-2.10.1.so

------------------------------------------------

:

:

:

: Disassembly of section .text:

:

: 000000000007ee20:

50.00 : 7ee20: 8a 07 mov (%rdi),%al

0.00 : 7ee22: 3a 06 cmp (%rsi),%al

25.00 : 7ee24: 75 0d jne 7ee33

25.00 : 7ee26: 48 ff c7 inc %rdi

0.00 : 7ee29: 48 ff c6 inc %rsi

0.00 : 7ee2c: 84 c0 test %al,%al

0.00 : 7ee2e: 75 f0 jne 7ee20

0.00 : 7ee30: 31 c0 xor %eax,%eax

0.00 : 7ee32: c3 retq

0.00 : 7ee33: b8 01 00 00 00 mov $0x1,%eax

0.00 : 7ee38: b9 ff ff ff ff mov $0xffffffff,%ecx

0.00 : 7ee3d: 0f 42 c1 cmovb %ecx,%eax

0.00 : 7ee40: c3 retq

Without debug info in the object code, just the annotated assembler is displayed.
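Reading the annotated loop: it loads a byte from each string, bails out at the first mismatch, and otherwise carries on until it hits the terminating NUL. On a mismatch, cmovb selects -1 when the first string's byte is lower (an unsigned comparison) and 1 otherwise. A Python re-expression of that same logic, for illustration only; it is not the glibc source.

```python
def strcmp_like(s1: bytes, s2: bytes) -> int:
    """Mirror the byte-by-byte strcmp loop annotated above.

    Both arguments are treated as NUL-terminated byte strings; running
    off the end of a bytes object is treated as reading a NUL.
    """
    i = 0
    while True:
        a = s1[i] if i < len(s1) else 0   # mov (%rdi),%al
        b = s2[i] if i < len(s2) else 0   # cmp (%rsi),%al
        if a != b:                        # jne 7ee33
            return -1 if a < b else 1     # cmovb picks -1 when "below" (unsigned)
        if a == 0:                        # test %al,%al ; jne 7ee20
            return 0                      # xor %eax,%eax
        i += 1                            # inc %rdi ; inc %rsi
```

Since the hot samples land on the load, compare and branch at the top of the loop, most of strcmp's time here is simply spent walking matching bytes.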

To see which events one can trace, use the perf list command:

./perf list

List of pre-defined events (to be used in -e):

cpu-cycles OR cycles [Hardware event]

instructions [Hardware event]

cache-references [Hardware event]

cache-misses [Hardware event]

branch-instructions OR branches [Hardware event]

branch-misses [Hardware event]

bus-cycles [Hardware event]

cpu-clock [Software event]

task-clock [Software event]

page-faults OR faults [Software event]

minor-faults [Software event]

major-faults [Software event]

context-switches OR cs [Software event]

cpu-migrations OR migrations [Software event]

L1-dcache-loads [Hardware cache event]

L1-dcache-load-misses [Hardware cache event]

...

...

sched:sched_migrate_task [Tracepoint event]

sched:sched_process_free [Tracepoint event]

sched:sched_process_exit [Tracepoint event]

sched:sched_process_wait [Tracepoint event]

sched:sched_process_fork [Tracepoint event]

sched:sched_signal_send [Tracepoint event]

On my system I have over 120 different types of events that can be monitored. One can select events to monitor using the -e event option, e.g.:

./perf stat -e L1-dcache-loads -e instructions dd if=/dev/zero of=/dev/null bs=1M count=1

1+0 records in

1+0 records out

1048576 bytes (1.0 MB) copied, 0.000784806 s, 1.3 GB/s

Performance counter stats for 'dd if=/dev/zero of=/dev/null bs=1M count=1':

1166059 L1-dcache-loads # inf M/sec

4283970 instructions # inf IPC

0.003090599 seconds time elapsed

This is one powerful tool! I recommend reading the documentation and trying it out for yourself on a 2.6.31 kernel.

References: http://lkml.org/lkml/2009/8/4/346

Wednesday, 4 November 2009

Gnome Panel Easter Egg

Found another Gnome Easter Egg today. Right click on an empty region in a gnome panel (top or bottom) and select Properties. Then right click 3 times on the General or Background tabs and you get an animated GEGL appearing thus:

..if you are wondering, the 5 legged goat is the GEGL mascot as created by George Lebl. Weird.

Tuesday, 3 November 2009

QEMU + EFI BIOS

I've been poking around with the Extensible Firmware Interface (EFI) and discovered that Tristan Gingold has kindly ported an EFI BIOS from the TianoCore project to QEMU.

The EFI tarball at the QEMU website contains an EFI BIOS image and a bootable Linux image that uses the elilo boot loader. I simply grabbed the tarball, unpacked it and moved the EFI bios.bin to /usr/share/qemu/bios-efi.bin to make sure I don't get confused between BIOS filenames.

Then I booted using the EFI BIOS and EFI enabled linux disk image:

qemu -bios bios-efi.bin -hda efi.disk

Here is the initial boot screen:

And here's the EFI command prompt:

So if you want to play with EFI, here's a great virtualized playground to experiment with. Kudos to TianoCore, Tristan Gingold and QEMU!

Sunday, 1 November 2009

Suppress 60 second delay in Logout/Restart/Shutdown

With Ubuntu 9.10 Karmic Koala, there is a 60 second confirmation delay when logging out, restarting or shutting down. This default can be overridden so that these actions happen instantly rather than waiting for a confirmation (and 60 second timeout) by setting the apps/indicator-session/suppress_logout_restart_shutdown boolean to true as follows:

gconftool-2 -s '/apps/indicator-session/suppress_logout_restart_shutdown' --type bool true

(or use gconf-editor to do this if you want to use a GUI based tool).

I suspect the rationale behind the 60 second confirmation delay is to give one the chance to cancel an accidentally selected logout, restart or shutdown before one's session is closed. So beware if you use this tweak: it assumes you really want to log out, restart or shut down instantly!

Thursday, 29 October 2009

Vista, Windows 7, Ubuntu 9.04 and 9.10 boot speed comparison

Nice demo here on tuxradar showing Vista, Windows 7, Ubuntu 9.04 and Ubuntu 9.10 booting all at the same time. I won't tell you who wins, just follow the link.. :-)

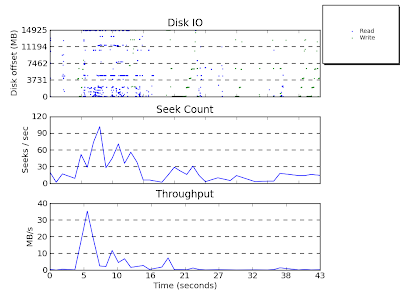

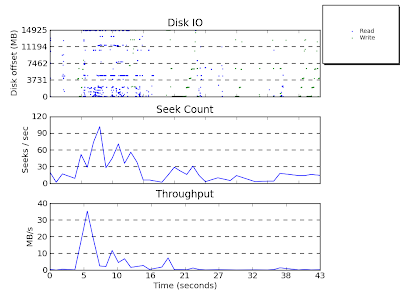

blktrace graphs revisited

I blogged about seekwatcher a couple of days ago - it's great for producing charts from blktrace - however, I wasn't totally happy with the graph output. I wanted to be able to graph the data based on a tunable sample rate. So I've hacked up a script using awk and gnuplot to do this.

To run, suppose I've generated blktrace files readtest.blktrace.0 and readtest.blktrace.1 and I want a graph generated with 0.25 seconds per point, then do:

./generate-read-graph blktrace readtest.blktrace. 0.25

This uses gnuplot to generate graph-reads.png, for example:

Now I'm happier with these results.
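The script itself isn't reproduced here, but the core idea, bucketing blkparse events by timestamp at a tunable interval, can be sketched in Python. This is an illustration rather than the awk script: it assumes blkparse's default text output, counts only completed reads ('C' events with an R in the RWBS field), and treats the "+ N" size as 512-byte sectors. The function name is hypothetical.

```python
from collections import defaultdict

SECTOR_BYTES = 512  # assumption: blkparse sizes are in 512-byte sectors


def read_kib_per_bucket(lines, interval):
    """Bin completed reads from blkparse text output into time buckets.

    Only 'C' (complete) events whose RWBS field contains 'R' are counted.
    Returns {bucket_start_seconds: KiB read in that bucket}, sorted by time.
    """
    buckets = defaultdict(float)
    for line in lines:
        f = line.split()
        # Expected layout: dev cpu seq time pid action rwbs sector + nsectors ...
        if len(f) < 10 or f[5] != "C" or "R" not in f[6] or f[8] != "+":
            continue
        index = int(float(f[3]) // interval)
        buckets[index] += int(f[9]) * SECTOR_BYTES / 1024
    return {i * interval: kib for i, kib in sorted(buckets.items())}


# Usage: print "time KiB" pairs, a two-column format gnuplot can plot directly.
# with open("readtest.txt") as fh:   # hypothetical blkparse output file
#     for t, kib in read_kib_per_bucket(fh, 0.25).items():
#         print(t, kib)
```

Empty buckets are simply absent from the result; a plotting script may want to fill them with zeros so quiet periods show up on the graph.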

Tuesday, 27 October 2009

Looking at free extent sizes on ext2, ext3 and ext4 filesystems

Today I stumbled on the wonders of e2freefrag, a tool for reporting free space fragmentation on ext2, ext3 and ext4 filesystems. It scans the block bitmap data and reports the free blocks present in terms of contiguous free extents and also aligned free space.

$ sudo e2freefrag /dev/sda1

Device: /dev/sda1

Blocksize: 1024 bytes

Total blocks: 489951

Free blocks: 242944 (49.6%)

Min. free extent: 1 KB

Max. free extent: 7676 KB

Avg. free extent: 2729 KB

HISTOGRAM OF FREE EXTENT SIZES:

Extent Size Range : Free extents Free Blocks Percent

1K... 2K- : 4 4 0.00%

2K... 4K- : 30 90 0.04%

4K... 8K- : 5 26 0.01%

128K... 256K- : 2 301 0.12%

256K... 512K- : 1 340 0.14%

512K... 1024K- : 6 4540 1.87%

1M... 2M- : 5 8141 3.35%

2M... 4M- : 5 14751 6.07%

4M... 8M- : 31 214751 88.40%

From this one can get an idea of the level of free space fragmentation in your filesystem.
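The summary figures hang together simply. The histogram's Free Blocks column sums to exactly the 242944 free blocks reported, each Percent value is that row's share of the total, and 242944 KB (1 KB blocks) spread over the 89 free extents in the histogram gives the 2729 KB average. A small Python sketch that recomputes them as a sanity check; summarise is an illustrative name, not part of e2freefrag.

```python
def summarise(histogram, free_blocks, block_size_kb=1):
    """Recompute e2freefrag-style percentages and the average free extent.

    histogram: list of (range_label, free_extents, free_blocks) rows.
    Returns (average_extent_kb, {label: percent_of_free_blocks}).
    """
    extents = sum(count for _, count, _ in histogram)
    avg_kb = free_blocks * block_size_kb // extents
    percents = {label: 100.0 * blocks / free_blocks
                for label, _, blocks in histogram}
    return avg_kb, percents


# Rows copied from the /dev/sda1 output above (1 KB blocks).
rows = [("1K-2K", 4, 4), ("2K-4K", 30, 90), ("4K-8K", 5, 26),
        ("128K-256K", 2, 301), ("256K-512K", 1, 340), ("512K-1M", 6, 4540),
        ("1M-2M", 5, 8141), ("2M-4M", 5, 14751), ("4M-8M", 31, 214751)]
avg, pct = summarise(rows, free_blocks=242944)
print(avg, f"{pct['4M-8M']:.2f}%")
```

A healthy filesystem, like this one, has most of its free space in the largest buckets; a growing share in the small buckets is the sign of free space fragmentation.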

BBC article on Ubuntu Karmic Koala

The BBC interviews Canonical's Chris Kenyon about Ubuntu 9.10 Karmic Koala. It's great to see the BBC helping to raise the profile of Ubuntu! Nice one.

Monday, 26 October 2009

blktrace based tools - seekwatcher

Previously I blogged about blktrace and how it can be used to analyse block I/O operations - however, it can generate a lot of data that can be overwhelming. This is where Chris Mason's Seekwatcher tool comes to the rescue. Seekwatcher uses blktrace data to generate graphs to help one visualise and understand I/O patterns. It allows one to plot multiple blktrace runs together to enable easy comparison between benchmarking test runs.

It requires Python, matplotlib and the numpy module; on Ubuntu, install these packages using:

sudo apt-get install python python-matplotlib python-numpy

Then get the seekwatcher source, extract seekwatcher from the source package, and you are ready to run the seekwatcher python script.

Seekwatcher can also generate animations of I/O patterns, which further helps one visualise and understand I/O operations over time.

To use seekwatcher, first start a blktrace capture:

blktrace -o trace -d /dev/sda

Next, kick off the test you want to analyse and, when that's complete, kill blktrace. Then run seekwatcher on the blktrace output:

seekwatcher -t trace.blktrace -o output.png

..and this generates a png file output.png. Easy!

Attached is the output from a test I just ran on my HP Mini 1000 starting up the Open Office word processor:

One can generate a movie from the same data using:

seekwatcher -t trace.blktrace -o open-office.mpg --movie

The generated movie is below:

There are more instructions on other ways to use seekwatcher on the seekwatcher webpage. All in all, a very handy tool - kudos to Chris Mason.

Saturday, 24 October 2009

Block I/O Layer Tracing using blktrace

blktrace is a really useful tool to see what I/O operations are going on inside the Linux block I/O layer. So what does blktrace provide:

- plenty of block layer information on I/O operations

- very low overhead (around 2%) when tracing

- highly configurable - trace I/O on one or several devices, selectable filter events

- live and playback tracing

On the user-space side, two tools are used: blktrace, which is the event extraction utility, and blkparse, which takes the event data and turns it into human-readable output.

Typically, one uses the tools as follows:

sudo blktrace -d /dev/sda -o - | blkparse -i -

And this will dump out data as follows:

8,0 0 1 0.000000000 1245 A W 25453095 + 8 <- (8,1) 25453032

8,0 0 2 0.000002374 1245 Q W 25453095 + 8 [postgres]

8,0 0 3 0.000010616 1245 G W 25453095 + 8 [postgres]

8,0 0 4 0.000018228 1245 P N [postgres]

8,0 0 5 0.000023397 1245 I W 25453095 + 8 [postgres]

8,0 0 6 0.000034222 1245 A W 25453103 + 8 <- (8,1) 25453040

8,0 0 7 0.000035968 1245 Q W 25453103 + 8 [postgres]

8,0 0 8 0.000040368 1245 M W 25453103 + 8 [postgres]

The 1st column shows the device major,minor tuple, e.g. (8,0). The 2nd column shows the CPU number. The 3rd column shows the sequence number. The 4th column is the timestamp in seconds which, as you can see, has a fairly high resolution. The 5th column is the PID of the process issuing the I/O request (in this example, 1245, the PID of postgres). The 6th column shows the event type, e.g. 'A' means a remapping from device (8,1) /dev/sda1 to device (8,0) /dev/sda; refer to the "ACTION IDENTIFIERS" section in the blkparse man page for more details on this field. The 7th column is R for Read, W for Write, D for Discard, B for Barrier operation. The next field is the block number, and the following + number is the number of blocks requested. The final field between the [ ] brackets is the name of the process issuing the request.
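To post-process this output (say, to count queued writes per process), each line can be split into those columns. A minimal Python sketch, assuming blkparse's default output format as shown above; events such as the plug (P) line carry no block number or size, so those fields are optional. The names BlkEvent and parse_blkparse_line are illustrative only.

```python
from typing import NamedTuple, Optional


class BlkEvent(NamedTuple):
    dev: str                # major,minor, e.g. "8,0"
    cpu: int
    seq: int
    time: float             # seconds since the trace started
    pid: int
    action: str             # event type, e.g. "A", "Q", "G", "P", "I", "M"
    rwbs: str               # e.g. "W", or "N" as on the plug line above
    block: Optional[int]    # starting block, if the event has one
    count: Optional[int]    # number of blocks after the "+"
    rest: str               # trailing text, e.g. "[postgres]" or "<- (8,1) 25453032"


def parse_blkparse_line(line: str) -> BlkEvent:
    f = line.split()
    block = count = None
    rest_start = 7
    if len(f) >= 10 and f[8] == "+":
        block, count = int(f[7]), int(f[9])
        rest_start = 10
    return BlkEvent(f[0], int(f[1]), int(f[2]), float(f[3]), int(f[4]),
                    f[5], f[6], block, count, " ".join(f[rest_start:]))
```

For example, parsing the second line of the output above gives a 'Q' event for block 25453095 with a count of 8, issued by PID 1245.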

To stop tracing, hit Control-C and a summary of the I/O operations is provided, e.g.:

CPU0 (8,0):

Reads Queued: 0, 0KiB Writes Queued: 128, 504KiB

Read Dispatches: 0, 0KiB Write Dispatches: 22, 508KiB

Reads Requeued: 0 Writes Requeued: 0

Reads Completed: 0, 0KiB Writes Completed: 32, 576KiB

Read Merges: 0, 0KiB Write Merges: 105, 420KiB

Read depth: 12 Write depth: 2

PC Reads Queued: 0, 0KiB PC Writes Queued: 0, 0KiB

PC Read Disp.: 9, 0KiB PC Write Disp.: 0, 0KiB

PC Reads Req.: 0 PC Writes Req.: 0

PC Reads Compl.: 0 PC Writes Compl.: 32

IO unplugs: 18 Timer unplugs: 0

CPU1 (8,0):

Reads Queued: 0, 0KiB Writes Queued: 16, 56KiB

Read Dispatches: 0, 0KiB Write Dispatches: 2, 52KiB

Reads Requeued: 0 Writes Requeued: 0

Reads Completed: 0, 0KiB Writes Completed: 0, 0KiB

Read Merges: 0, 0KiB Write Merges: 11, 44KiB

Read depth: 12 Write depth: 2

PC Reads Queued: 0, 0KiB PC Writes Queued: 0, 0KiB

PC Read Disp.: 3, 0KiB PC Write Disp.: 0, 0KiB

PC Reads Req.: 0 PC Writes Req.: 0

PC Reads Compl.: 0 PC Writes Compl.: 0

IO unplugs: 6 Timer unplugs: 0

Total (8,0):

Reads Queued: 0, 0KiB Writes Queued: 144, 560KiB

Read Dispatches: 0, 0KiB Write Dispatches: 24, 560KiB

Reads Requeued: 0 Writes Requeued: 0

Reads Completed: 0, 0KiB Writes Completed: 32, 576KiB

Read Merges: 0, 0KiB Write Merges: 116, 464KiB

PC Reads Queued: 0, 0KiB PC Writes Queued: 0, 0KiB

PC Read Disp.: 12, 0KiB PC Write Disp.: 0, 0KiB

PC Reads Req.: 0 PC Writes Req.: 0

PC Reads Compl.: 0 PC Writes Compl.: 32

IO unplugs: 24 Timer unplugs: 0

Throughput (R/W): 0KiB/s / 537KiB/s

Events (8,0): 576 entries

Skips: 0 forward (0 - 0.0%)

As tools go, this one is excellent for in-depth understanding of block I/O operations inside the kernel. I am sure it has many different applications and it's well worth playing with this tool to get familiar with all of the features provided. The ability to filter specific events allows one to focus and drill down on specific types of I/O operations without being buried by tracing output overload.

Jens Axboe, the block layer maintainer, developed and maintains blktrace. Alan D. Brunelle has contributed a lot of extra functionality. I recommend reading Brunelle's user guide to get started, and also the blktrace paper, which contains a lot more in-depth instruction on how to use this tool. The blktrace and blkparse manual pages provide more details on how to use the tools, but I'd recommend eyeballing Brunelle's user guide first.

Thursday, 22 October 2009

Automounting Filesystems in user space using afuse

Afuse is a useful utility to allow one to automount filesystems using FUSE. Below is a nifty hack shown to me by Jeremy Kerr to automount directories on remote machines using sshfs:

sudo apt-get install sshfs afuse

mkdir ~/sshfs

afuse -o mount_template="sshfs %r:/ %m" \
-o unmount_template="fusermount -u -z %m" ~/sshfs/

cat ~/sshfs/king@server1.local/etc/hostname

server1

Ben Martin has documented afuse and some useful examples here.
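The trick relies on afuse filling in the templates when a directory under ~/sshfs is first accessed: as I understand it, %r becomes the name looked up (here king@server1.local), %m the mount point afuse picks, and %% a literal percent sign. The Python sketch below only illustrates that substitution; it is not afuse's code, and the mount point path in the usage line is made up.

```python
def expand_template(template: str, root: str, mountpoint: str) -> str:
    """Illustrative %r / %m / %% expansion of an afuse-style command template."""
    out, i = [], 0
    while i < len(template):
        if template[i] == "%" and i + 1 < len(template):
            code = template[i + 1]
            if code == "r":
                out.append(root)            # name being accessed, e.g. user@host
            elif code == "m":
                out.append(mountpoint)      # where the filesystem gets mounted
            elif code == "%":
                out.append("%")             # escaped percent sign
            else:
                out.append(template[i:i + 2])  # leave unknown codes untouched
            i += 2
        else:
            out.append(template[i])
            i += 1
    return "".join(out)


# Hypothetical mount point; real afuse chooses its own.
print(expand_template("sshfs %r:/ %m", "king@server1.local", "/tmp/afuse/king@server1.local"))
```

So reading ~/sshfs/king@server1.local/etc/hostname effectively runs an sshfs mount of king@server1.local:/ behind the scenes, and the unmount template is used to tear it down again later.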

Wednesday, 21 October 2009

Disabling Gnome Terminal Blinking Cursor

Looking at powertop, an idle Gnome Terminal wakes up around twice a second just to animate the blinking cursor. While this is only a small number of wake-ups per second, it still wastes power, and I also find a blinking cursor a little annoying. To disable cursor blinking for the Default profile, run gconf-editor and set /apps/gnome-terminal/profiles/Default/cursor_blink_mode to off.

Tuesday, 20 October 2009

Makerbot - an open source 3D printer

This week I'm attending an Ubuntu Kernel Sprint and my colleague Steve Conklin brought along a fantastic gizmo: the Makerbot 3D printer. It is fascinating to watch it print 3D objects by extruding a thin trail of ABS plastic in layers. It can print objects up to 4" x 4" x 6", and imagination is the limiting factor to what it can print. Steve has already demo'd it printing a variety of objects, the most impressive being a working whistle with a moving ball inside. It's not too slow either: it took about 25 minutes to print the whistle, which isn't bad considering the complexity and size of the object.

It's a cool piece of kit - doubly so because it's completely open sourced.

System Management Mode is Evil

System Management Mode (SMM) is an evil thing. It's a feature that was introduced on the Intel 386SL and allows an operating system to be interrupted and normal execution to be temporarily suspended to execute SMM code at a very high privilege level. It's normally configured at boot-time by the BIOS and the OS has zero knowledge about it.

The System Management Interrupt (SMI) causes the CPU to enter System Management Mode (SMM). An SMI is usually generated by:

- The processor being configured to generate an SMI on a write to a predefined I/O address.

- Signalling on a predefined pin on the CPU (the SMI# pin).

- Access to a predefined I/O port (port 0xB2 is generally used).

An SMI cannot be masked or overridden, which means an OS has no way of avoiding being interrupted by it. The SMI steals CPU cycles and modifies CPU state: state is saved to and restored from System Management RAM (SMRAM), and apparently the write-back caches have to be flushed on entry to SMM. This can mess up real-time performance by adding hidden latencies that the OS cannot block. Because CPU cycles are consumed behind the OS's back, Time Stamp Counter (TSC) skewing occurs relative to the OS's view of CPU timing, and generally the OS cannot account for the lost cycles. One also has to rely on the SMM code being written correctly and not interfering with the state of the OS; weird, inexplicable problems may occur if the SMM code is buggy.

By monitoring the TSC one can detect whether a system has entered SMM. In fact, Jon Masters has written a Linux module to do this.

Processors such as the MediaGX (from which the Geode was derived) used SMM to emulate real hardware such as VGA and the Sound Blaster audio, which is a novel solution, but means that one cannot reliably do any real-time work on this processor.

The worrying feature of SMM is that it can be exploited and used for rootkits - it's hard to detect and one cannot block it. Doing things behind an OS's back without it knowing and in a way that messes with critical timing and can lead to rootkit exploits is just plain evil in my opinion. If it were up to me, I'd ban the use of it completely.

For those who are interested in looking at an implementation of SMM handling code, coreboot has some well written and well commented code in src/cpu/x86/smm. It's well worth eyeballing. Phrack has some useful documentation on SMM and generating SMIs, and mjg59 has an article that shows an ACPI table that generates SMIs by writing to port 0xb2.

Footnote: Even Intel admits SMIs are unpleasant. The Intel Integrated Graphics Device OpRegion Specification (section 1.4) states:

"SMIs are particularly problematic since they switch the processor into system management mode (SMM), which has a high context switch cost (1). The transition to SMM is also invisible to the OS and may involve large amounts of processing before resuming normal operation. This can lead to bad behavior like video playback skipping, network packet loss due to timeouts, and missed deadlines for OS timers, which require high precision."

Friday, 16 October 2009

Always read the README file..

You know how it is: you get some source code, fiddle around with it and try to make it build, install and work... and then, after wasting some time, you eventually get around to consulting the README file. Well, some README files are well worth reading. Here are the contents of the gnome-cups-manager README file... very amusing.

gnome-cups-manager

------------------

Once upon a time there was a printer who lived in the woods. He was a lonely printer, because nobody knew how to configure him. He hoped and hoped for someone to play with.

One day, the wind passed by the printer's cottage. "Whoosh," said the wind. The printer became excited. Maybe the wind would be his friend!

"Will you be my friend?" the printer asked.

"Whoosh," said the wind.

"What does that mean?" asked the printer.

"Whoosh," said the wind, and with that it was gone.

The printer was confused. He spent the rest of the day thinking and jamming paper (for that is what little printers do when they are confused).

The next day a storm came. The rain came pouring down, darkening the morning sky and destroying the printer's garden. The little printer was upset. "Why are you being so mean to me?" he asked.

"Pitter Patter, Pitter Patter," said the rain.

"Will you be my friend?" the printer asked shyly.

"Pitter Patter, Pitter Patter," said the rain, and then it left and the sun came out.

The printer was sad. He spent the rest of the day inside, sobbing and blinking lights cryptically (for that is what little printers do when they are sad).

Then one day, a little girl stumbled into the printer's clearing in the woods. The printer looked at this curious sight. He didn't know what to think.

The little girl looked up at him. "Will you be my friend?" she asked.

"Yes," said the printer.

"What is your name?" asked the little girl.

"HP 4100TN", replied the printer.

"My name is gnome-cups-manager" said the little girl.

The printer was happy. He spent the rest of the day playing games and printing documents, for that is what little printers do when they are happy.

Note that this text is covered by the GNU GENERAL PUBLIC LICENSE, Version 2, June 1991.

Disabling terminal bell in bash and vim

The terminal bell sound generated in bash and vim is rather annoying to me, and it is also an audible alert to my colleagues that I'm typing badly. So how does one disable it?

To disable it in bash, open ~/.inputrc and add the following line:

set bell-style none

To disable the audible bell in the vim editor, edit ~/.vimrc and add the following line, which switches vim to a visual bell (a brief screen flash) instead:

set vb

Ah - silence again! That's one less distraction to contend with!

Thursday, 15 October 2009

Encrypted Private Directory

One neat feature that's been in Ubuntu since Intrepid Ibex is the ability to encrypt a private directory. This allows one to put sensitive data, such as one's ssh and gnupg keys as well as data such as email into an encrypted directory. Thanks to Dustin Kirkland for writing up how to do this.

Basically, an encrypted Private directory is created, and one moves the directories one wants encrypted into it, then creates symbolic links from their original locations to the moved copies in the Private directory. At login time the encrypted Private directory is automatically mounted, and it is unmounted again at logout.

Note that hibernating is not a good idea with this solution, since the contents of memory, including any private data, are written out to swap, which is not encrypted.

2009 Linux Plumbers Conference Videos

For those of you who could not attend the 2009 Linux Plumbers Conference in Portland last month, the Linux Foundation has now uploaded a whole bunch of videos of some of the talks, so you can now attend the conference from the comfort of your computer.

Linus's Advanced Git Tutorial (below) is an example of the kind of content available.

Below is a pretty impressive demo of Wayland, a new display server for Linux; it's a great example of the way compositing should be done. Well worth viewing.

I'm glad these talks got videoed, as I was unable to attend some of them because I was attending other talks in parallel tracks.

Wednesday, 14 October 2009

Multi-touch input demo

The 10/GUI project has a crisp video (below) of a multi-touch desktop user interface, illustrating the downsides of the single-point, mouse-orientated GUI compared with the advantages of a multi-touch GUI.

I quite like the presentation; however, I'm not sure how this maps onto a laptop, where touchpad and keyboard real estate is limited. I also feel that it won't work out well for users who have had a bad accident with a hedge strimmer and have a few fewer fingers to use.

Anyhow, it's an interim solution; in 25 years' time we will be controlling computers without any physical user interface, as thought control will be the norm... ;-) Perhaps that should be called the 0/GUI, as it won't require any fingers at all.

Monday, 12 October 2009

Dumping ACPI tables using acpidump and acpixtract

Most of the time when I'm looking at BIOS issues I just look at the disassembled ACPI DSDT using the following runes:

$ sudo cat /proc/acpi/dsdt > dsdt.dat

$ iasl -d dsdt.dat

..and then look at the disassembly in dsdt.dsl