|

| The stress-ng logo |

The cyclic test can be configured to specify the sleep time (in nanoseconds), the scheduling type (rr or fifo), the scheduling priority (1 to 100) and also the sleep method (explained later).

The first 10,000 latency measurements are used to compute various latency statistics:

- mean latency (aka the 'average')

- modal latency (the most 'popular' latency)

- minimum latency

- maximum latency

- standard deviation

- latency percentiles (25%, 50%, 75%, 90%, 95.40%, 99.0%, 99.5%, 99.9% and 99.99%

- latency distribution (enabled with the --cyclic-dist option)

The latency distribution is shown when the --cyclic-dist option is used; one has to specify the distribution interval in nanoseconds and up to the first 100 values in the distribution are output.

For an idle machine, one can invoke just the cyclic measurements with stress-ng as follows:

sudo stress-ng --cyclic 1 --cyclic-policy fifo \

--cyclic-prio 100 --cyclic-method --clock_ns \

--cyclic-sleep 20000 --cyclic-dist 1000 -t 5

stress-ng: info: [27594] dispatching hogs: 1 cyclic

stress-ng: info: [27595] stress-ng-cyclic: sched SCHED_FIFO: 20000 ns delay, 10000 samples

stress-ng: info: [27595] stress-ng-cyclic: mean: 5242.86 ns, mode: 4880 ns

stress-ng: info: [27595] stress-ng-cyclic: min: 3050 ns, max: 44818 ns, std.dev. 1142.92

stress-ng: info: [27595] stress-ng-cyclic: latency percentiles:

stress-ng: info: [27595] stress-ng-cyclic: 25.00%: 4881 us

stress-ng: info: [27595] stress-ng-cyclic: 50.00%: 5191 us

stress-ng: info: [27595] stress-ng-cyclic: 75.00%: 5261 us

stress-ng: info: [27595] stress-ng-cyclic: 90.00%: 5368 us

stress-ng: info: [27595] stress-ng-cyclic: 95.40%: 6857 us

stress-ng: info: [27595] stress-ng-cyclic: 99.00%: 8942 us

stress-ng: info: [27595] stress-ng-cyclic: 99.50%: 9821 us

stress-ng: info: [27595] stress-ng-cyclic: 99.90%: 22210 us

stress-ng: info: [27595] stress-ng-cyclic: 99.99%: 36074 us

stress-ng: info: [27595] stress-ng-cyclic: latency distribution (1000 us intervals):

stress-ng: info: [27595] stress-ng-cyclic: latency (us) frequency

stress-ng: info: [27595] stress-ng-cyclic: 0 0

stress-ng: info: [27595] stress-ng-cyclic: 1000 0

stress-ng: info: [27595] stress-ng-cyclic: 2000 0

stress-ng: info: [27595] stress-ng-cyclic: 3000 82

stress-ng: info: [27595] stress-ng-cyclic: 4000 3342

stress-ng: info: [27595] stress-ng-cyclic: 5000 5974

stress-ng: info: [27595] stress-ng-cyclic: 6000 197

stress-ng: info: [27595] stress-ng-cyclic: 7000 209

stress-ng: info: [27595] stress-ng-cyclic: 8000 100

stress-ng: info: [27595] stress-ng-cyclic: 9000 50

stress-ng: info: [27595] stress-ng-cyclic: 10000 10

stress-ng: info: [27595] stress-ng-cyclic: 11000 9

stress-ng: info: [27595] stress-ng-cyclic: 12000 2

stress-ng: info: [27595] stress-ng-cyclic: 13000 2

stress-ng: info: [27595] stress-ng-cyclic: 14000 1

stress-ng: info: [27595] stress-ng-cyclic: 15000 9

stress-ng: info: [27595] stress-ng-cyclic: 16000 1

stress-ng: info: [27595] stress-ng-cyclic: 17000 1

stress-ng: info: [27595] stress-ng-cyclic: 18000 0

stress-ng: info: [27595] stress-ng-cyclic: 19000 0

stress-ng: info: [27595] stress-ng-cyclic: 20000 0

stress-ng: info: [27595] stress-ng-cyclic: 21000 1

stress-ng: info: [27595] stress-ng-cyclic: 22000 1

stress-ng: info: [27595] stress-ng-cyclic: 23000 0

stress-ng: info: [27595] stress-ng-cyclic: 24000 1

stress-ng: info: [27595] stress-ng-cyclic: 25000 2

stress-ng: info: [27595] stress-ng-cyclic: 26000 0

stress-ng: info: [27595] stress-ng-cyclic: 27000 1

stress-ng: info: [27595] stress-ng-cyclic: 28000 1

stress-ng: info: [27595] stress-ng-cyclic: 29000 2

stress-ng: info: [27595] stress-ng-cyclic: 30000 0

stress-ng: info: [27595] stress-ng-cyclic: 31000 0

stress-ng: info: [27595] stress-ng-cyclic: 32000 0

stress-ng: info: [27595] stress-ng-cyclic: 33000 0

stress-ng: info: [27595] stress-ng-cyclic: 34000 0

stress-ng: info: [27595] stress-ng-cyclic: 35000 0

stress-ng: info: [27595] stress-ng-cyclic: 36000 1

stress-ng: info: [27595] stress-ng-cyclic: 37000 0

stress-ng: info: [27595] stress-ng-cyclic: 38000 0

stress-ng: info: [27595] stress-ng-cyclic: 39000 0

stress-ng: info: [27595] stress-ng-cyclic: 40000 0

stress-ng: info: [27595] stress-ng-cyclic: 41000 0

stress-ng: info: [27595] stress-ng-cyclic: 42000 0

stress-ng: info: [27595] stress-ng-cyclic: 43000 0

stress-ng: info: [27595] stress-ng-cyclic: 44000 1

stress-ng: info: [27594] successful run completed in 5.00s

Note that stress-ng needs to be invoked using sudo to enable the Real Time FIFO scheduling for the cyclic measurements.

The above example uses the following options:

- --cyclic 1

- starts one instance of the cyclic measurements (1 is always recommended)

- --cyclic-policy fifo

- use the real time First-In-First-Out scheduling for the cyclic measurements

- --cyclic-prio 100

- use the maximum scheduling priority

- --cyclic-method clock_ns

- use the clock_nanoseconds(2) system call to perform the high precision duration sleep

- --cyclic-sleep 20000

- sleep for 20000 nanoseconds per cyclic iteration

- --cyclic-dist 1000

- enable latency distribution statistics with an interval of 1000 nanoseconds between each data point.

- -t 5

- run for just 5 seconds

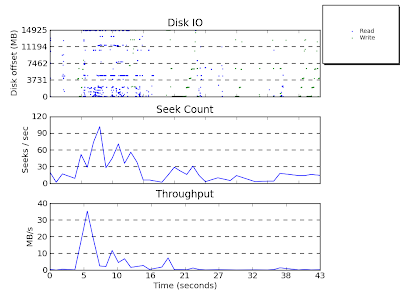

Now for the interesting part. Since stress-ng is packed with many different stressors we can run these while performing the cyclic measurements, for example, we can tell stress-ng to run *all* the virtual memory related stress tests and see how this affects the latency distribution using the following:

sudo stress-ng --cyclic 1 --cyclic-policy fifo \

--cyclic-prio 100 --cyclic-method clock_ns \

--cyclic-sleep 20000 --cyclic-dist 1000 \

--class vm --all 1 -t 60s

..the above invokes all the vm class of stressors to run all at the same time (with just one instance of each stressor) for 60 seconds.

The --cyclic-method specifies the delay used on each of the 10,000 cyclic iterations used. The default (and recommended method) is clock_ns, using the high precision delay. The available cyclic delay methods are:

- clock_ns (use the clock_nanosecond() sleep)

- posix_ns (use the POSIX nanosecond() sleep)

- itimer (use a high precision clock timer and pause to wait for a signal to measure latency)

- poll (busy spin-wait on clock_gettime() to eat cycles for a delay.

I hope this is plenty of cyclic measurement functionality to get some useful latency benchmarks against various kernel components when using some or a mix of the stress-ng stressors. Let me know if I am missing some other cyclic measurement options and I can see if I can add them in.

Keep stressing and measuring those systems!