Whenever a process accesses a virtual address that does not currently have a physical page mapped into its address space, a page fault occurs. The CPU raises a page fault exception so that the kernel can handle the fault.

A minor page fault occurs when the kernel can successfully map a physically resident page for the faulted user-space virtual address (for example, when accessing a memory-resident page that is already shared by other processes). A major page fault occurs when accessing a page that has been swapped out, or a file-backed memory-mapped page that is not resident in memory.
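To see minor faults happen, here is a minimal Python sketch (an illustration, not part of faultstat) that maps a fresh anonymous region, writes to each page once, and reads the process's minor fault count from getrusage(2) before and after. It assumes Linux and Python 3.8 or later; the function name minor_faults_from_touching is hypothetical.

```python
import mmap
import resource

PAGE_SIZE = mmap.PAGESIZE


def minor_faults_from_touching(num_pages: int) -> int:
    """Write once to every page of a new anonymous private mapping and
    return how many minor page faults this process took while doing so."""
    # fileno -1 asks Python for an anonymous mapping
    region = mmap.mmap(-1, num_pages * PAGE_SIZE, flags=mmap.MAP_PRIVATE)
    try:
        before = resource.getrusage(resource.RUSAGE_SELF).ru_minflt
        for offset in range(0, num_pages * PAGE_SIZE, PAGE_SIZE):
            region[offset] = 1  # first write to a page maps it in: a minor fault
        after = resource.getrusage(resource.RUSAGE_SELF).ru_minflt
    finally:
        region.close()
    return after - before


print("minor faults:", minor_faults_from_touching(256))
```

Expect a count close to the number of pages touched; transparent huge pages can lower it and faults taken by the interpreter itself can raise it.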

Page faults add latency to a running program, major faults especially so, because the pages have to be loaded in from a storage device.

The faultstat tool makes it easy to monitor page fault activity and find the most active page-faulting processes. Running faultstat with no options will dump the page fault statistics of all processes, sorted in major+minor page fault order.
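As a rough sketch of the kind of listing faultstat produces by default, the Python below reads the cumulative minflt and majflt counters for every process from /proc/<pid>/stat (fields 10 and 12 in proc(5)) and sorts by the major+minor total. This is an illustration under those assumptions, not faultstat's source; the helper names are hypothetical, and processes that exit mid-scan are simply skipped.

```python
import os


def read_faults(pid: str):
    """Return (comm, minflt, majflt) for one process, or None if unavailable."""
    try:
        with open(f"/proc/{pid}/stat") as f:
            stat = f.read()
        # comm is wrapped in parentheses and may contain spaces, so only split
        # the text after the last ')'. That text starts at field 3 (state),
        # so proc(5) field N is at index N - 3.
        comm = stat[stat.index("(") + 1 : stat.rindex(")")]
        rest = stat[stat.rindex(")") + 2 :].split()
        return comm, int(rest[7]), int(rest[9])  # fields 10 and 12
    except (OSError, ValueError, IndexError):
        return None  # process exited or its stat file was unreadable


def top_faulters(limit: int = 10):
    """Processes sorted by major+minor page fault count, highest first."""
    rows = []
    for pid in filter(str.isdigit, os.listdir("/proc")):
        info = read_faults(pid)
        if info is not None:
            comm, minflt, majflt = info
            rows.append((majflt + minflt, int(pid), majflt, minflt, comm))
    rows.sort(reverse=True)
    return rows[:limit]


print(f"{'PID':>7} {'Major':>10} {'Minor':>12}  Command")
for total, pid, majflt, minflt, comm in top_faulters():
    print(f"{pid:>7} {majflt:>10} {minflt:>12}  {comm}")
```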

Faultstat also has a "top"-like mode: invoking it with the -T option will display the top page-faulting processes, again in major+minor page fault order.

The Major and Minor columns show the respective major and minor page faults. The +Major and +Minor columns show the recent increase of page faults. The Swap column shows the swap size of the process in pages.
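The +Major and +Minor columns are differences between successive samples. A minimal sketch of that bookkeeping, assuming two snapshots that map each PID to its cumulative (major, minor) counts, might look like this. Treating a newly seen PID as starting from zero is a choice of this sketch, and the function names and sample numbers are hypothetical.

```python
def fault_deltas(previous: dict[int, tuple[int, int]],
                 current: dict[int, tuple[int, int]]) -> dict[int, tuple[int, int]]:
    """pid -> (+major, +minor) between two snapshots of cumulative counts."""
    deltas = {}
    for pid, (major, minor) in current.items():
        prev_major, prev_minor = previous.get(pid, (0, 0))  # new PID: from zero
        deltas[pid] = (major - prev_major, minor - prev_minor)
    return deltas


def by_growth(deltas: dict[int, tuple[int, int]]) -> list[tuple[int, tuple[int, int]]]:
    """Order PIDs by their recent major+minor increase, largest first."""
    return sorted(deltas.items(), key=lambda kv: kv[1][0] + kv[1][1], reverse=True)


before = {100: (2, 500), 200: (0, 40)}
after = {100: (3, 520), 200: (0, 900), 300: (1, 10)}
for pid, (d_major, d_minor) in by_growth(fault_deltas(before, after)):
    print(pid, f"+{d_major}", f"+{d_minor}")  # PID 200 first: largest growth
```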

Pressing the 's' key cycles through the sort orders. Pressing the 'a' key adds an arrow annotation showing page fault growth change. The 't' key toggles between showing the cumulative major/minor page fault totals and the current change in major/minor faults.

The faultstat tool has just landed in Ubuntu Eoan and can also be installed as a snap. The source is available on GitHub.


Tuesday, 13 August 2019

Saturday, 8 June 2019

Working towards stress-ng 0.10.00


Over the past 9+ months I've been cleaning up stress-ng in preparation for a V0.10.00 release. Stress-ng is a portable Linux/UNIX Swiss army knife of micro-benchmarking kernel stress tests. The Ubuntu kernel team uses stress-ng for kernel regression testing in several ways:

- Checking that the kernel does not crash when being stress tested

- Performance (bogo-op throughput) regression checks

- Power consumption regression checks

- Core CPU Thermal regression checks

I've tried to focus on several aspects of stress-ng over the last development cycle:

- Improve per-stressor modularization. A lot of code has been moved from the core of stress-ng back into each stress test.

- Clean up a lot of corner case bugs found when we've been testing stress-ng in production. We exercise stress-ng on a lot of hardware and in various cloud instances, so we find occasional bugs in stress-ng.

- Improve usability, for example, adding bash command completion.

- Improve portability (various kernels, compilers and C libraries). It really builds and runs on a *lot* of Linux/UNIX/POSIX systems.

- Improve kernel test coverage. Try to exercise more kernel core functionality and reach parts other tests don't yet reach.

With the use of gcov + lcov, I can observe where stress-ng is not currently exercising the kernel, and this allows me to devise stress tests to touch these unexercised parts. The tool has a history of tripping kernel bugs, so I'm quite pleased it has helped us to find corners of the kernel that needed improving.

This week I released V0.09.59 of stress-ng. Apart from the usual sets of clean up changes and bug fixes, this new release now incorporates bash command line completion to make it easier to use. Once the 5.2 Linux kernel has been released and I'm satisfied that stress-ng covers new 5.2 features I will probably be releasing V0.10.00. This will be a major release milestone now that stress-ng has realized most of my original design goals.

Thursday, 22 November 2018

High-level tracing with bpftrace

Bpftrace is a new high-level tracing language for Linux using the extended Berkeley packet filter (eBPF). It is a very powerful and flexible tracing front-end that enables systems to be analyzed much like DTrace.

The bpftrace tool is now installable as a snap. From the command line one can install it and enable it to use system tracing as follows:

sudo snap install bpftrace

sudo snap connect bpftrace:system-trace

To illustrate the power of bpftrace, here are some simple one-liners:

# trace openat() system calls

sudo bpftrace -e 'tracepoint:syscalls:sys_enter_openat { printf("%d %s %s\n", pid, comm, str(args->filename)); }'

Attaching 1 probe...

1080 irqbalance /proc/interrupts

1080 irqbalance /proc/stat

2255 dmesg /etc/ld.so.cache

2255 dmesg /lib/x86_64-linux-gnu/libtinfo.so.5

2255 dmesg /lib/x86_64-linux-gnu/librt.so.1

2255 dmesg /lib/x86_64-linux-gnu/libc.so.6

2255 dmesg /lib/x86_64-linux-gnu/libpthread.so.0

2255 dmesg /usr/lib/locale/locale-archive

2255 dmesg /lib/terminfo/l/linux

2255 dmesg /home/king/.config/terminal-colors.d

2255 dmesg /etc/terminal-colors.d

2255 dmesg /dev/kmsg

2255 dmesg /usr/lib/x86_64-linux-gnu/gconv/gconv-modules.cache

# count system calls using tracepoints:

sudo bpftrace -e 'tracepoint:syscalls:sys_enter_* { @[probe] = count(); }'

@[tracepoint:syscalls:sys_enter_getsockname]: 1

@[tracepoint:syscalls:sys_enter_kill]: 1

@[tracepoint:syscalls:sys_enter_prctl]: 1

@[tracepoint:syscalls:sys_enter_epoll_wait]: 1

@[tracepoint:syscalls:sys_enter_signalfd4]: 2

@[tracepoint:syscalls:sys_enter_utimensat]: 2

@[tracepoint:syscalls:sys_enter_set_robust_list]: 2

@[tracepoint:syscalls:sys_enter_poll]: 2

@[tracepoint:syscalls:sys_enter_socket]: 3

@[tracepoint:syscalls:sys_enter_getrandom]: 3

@[tracepoint:syscalls:sys_enter_setsockopt]: 3

...

Note that it is recommended to use bpftrace with Linux 4.9 or higher.

The bpftrace GitHub project page has an excellent README guide with some worked examples and is a very good place to start. There is also a very useful reference guide and a one-liner tutorial.

If you have any useful bpftrace one-liners, it would be great to share them. This is an amazingly powerful tool, and it would be interesting to see how it will be used.

Friday, 1 September 2017

Static analysis on the Linux kernel

There is a wealth of powerful static analysis tools available nowadays for analyzing C source code. These tools help to find bugs by analyzing the source code without having to execute it. Over the past year or so I have been running the following static analysis tools on linux-next every weekday to find kernel bugs:

- cppcheck

- smatch

- sparse

- clang scan-build

- CoverityScan

- The latest gcc

At the end of each run, the output from the previous run is diff'd against the new output to generate a list of new and fixed issues. I then manually wade through these and try to fix some of the low-hanging fruit when I can find free time to do so.
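A minimal Python sketch of that comparison, assuming each tool's output has already been split into one warning per line. Line and column numbers are stripped first (an assumption of this sketch, not necessarily what the real scripts do) so that a warning that merely moved does not show up as both new and fixed. The function names and file names in the example are made up.

```python
import re


def normalise(warning: str) -> str:
    """Drop ':line:' or ':line:col:' so a warning that only moved still matches."""
    return re.sub(r":\d+(:\d+)?:", ":", warning.strip(), count=1)


def compare_runs(previous: list[str], current: list[str]) -> tuple[list[str], list[str]]:
    """Return (new_issues, fixed_issues) between two runs of a tool."""
    prev = {normalise(w) for w in previous if w.strip()}
    curr = {normalise(w) for w in current if w.strip()}
    return sorted(curr - prev), sorted(prev - curr)


yesterday = ["drivers/foo.c:10:5: warning: unused variable 'x'",
             "fs/bar.c:20: error: null pointer dereference"]
today = ["drivers/foo.c:12:5: warning: unused variable 'x'",
         "mm/baz.c:7:1: warning: 'y' is used uninitialized"]
new_issues, fixed_issues = compare_runs(yesterday, today)
print("new:", new_issues)
print("fixed:", fixed_issues)
```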

I've been gathering statistics from the CoverityScan builds for the past 12 months tracking the number of defects found, outstanding issues and number of defects eliminated:

As one can see, there are a lot of defects getting fixed by the Linux developers and the overall trend of outstanding issues is downwards, which is good to see. The defect rate in linux-next is currently 0.46 issues per 1000 lines (out of over 13 million lines that are being scanned). A typical defect rate for a project this size is 0.5 issues per 1000 lines. Some of these issues are false positives or very minor/insignificant issues that will not cause any run time issues at all, so don't be too alarmed by the statistics.
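As a quick sanity check of those numbers: assuming exactly 13 million scanned lines (the text says "over"), a rate of 0.46 issues per 1000 lines corresponds to roughly 6,000 outstanding issues, against about 6,500 at the typical 0.5 rate. The small Python helpers below just encode that arithmetic; their names are hypothetical.

```python
def defects_per_kloc(defects: int, lines: int) -> float:
    """Defect density in issues per 1000 lines of code."""
    return defects / (lines / 1000)


def expected_defects(per_kloc: float, lines: int) -> float:
    """Number of issues implied by a given defect density."""
    return per_kloc * lines / 1000


LINES_SCANNED = 13_000_000  # assumption: "over 13 million" rounded down
print(round(expected_defects(0.46, LINES_SCANNED)))  # 5980
print(round(expected_defects(0.5, LINES_SCANNED)))   # 6500
```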

Using a range of static analysis tools is useful because each one has its own strengths and weaknesses. For example, smatch and sparse are designed for sanity checking the kernel source, so they have some smarts that detect kernel-specific semantic issues. CoverityScan is a commercial product; however, it allows open source projects the size of the Linux kernel to be built daily, its web-based bug tracking tool is very easy to use, and it reliably finds bugs that other tools can't reach. Cppcheck is useful as it scans all the code paths by forcibly trying all the #ifdef'd variations of code, which is useful on the more obscure CONFIG mixes.

Finally, I use clang's scan-build and the latest version of gcc to try to find the more typical warnings found by the static analysis built into modern open source compilers.

The more typical issues found by static analysis are ones that don't generally show up at run time, such as corner cases in error handling code paths, resource leaks, resource failure conditions, uninitialized variables or dead code paths.

My intention is to continue this process of daily checking and I hope to report back next September to review the CoverityScan trends for another year.

Tuesday, 18 July 2017

New features landing in stress-ng V0.08.09

The latest release of stress-ng V0.08.09 incorporates new stressors and a handful of bug fixes. So what is new in this release?

- memrate stressor to exercise and measure memory read/write throughput

- matrix yx option to swap order of matrix operations

- matrix stressor size can now be up to 8192 x 8192

- radixsort stressor (using the BSD library radixsort) to exercise CPU and memory

- improved job script parsing and error reporting

- faster termination of rmap stressor (this was slow inside VMs)

- icache stressor now calls cacheflush()

- anonymous memory mappings are now private allowing hugepage madvise

- fcntl stressor exercises the 4.13 kernel F_GET_FILE_RW_HINT and F_SET_FILE_RW_HINT

- stream and vm stressors have new madvise options

stress-ng --memrate 1 --memrate-bytes 1G \
--memrate-rd-mbs 1000 --memrate-wr-mbs 2000 -t 60

stress-ng: info: [22880] dispatching hogs: 1 memrate

stress-ng: info: [22881] stress-ng-memrate: write64: 1998.96 MB/sec

stress-ng: info: [22881] stress-ng-memrate: read64: 998.61 MB/sec

stress-ng: info: [22881] stress-ng-memrate: write32: 1999.68 MB/sec

stress-ng: info: [22881] stress-ng-memrate: read32: 998.80 MB/sec

stress-ng: info: [22881] stress-ng-memrate: write16: 1999.39 MB/sec

stress-ng: info: [22881] stress-ng-memrate: read16: 999.66 MB/sec

stress-ng: info: [22881] stress-ng-memrate: write8: 1841.04 MB/sec

stress-ng: info: [22881] stress-ng-memrate: read8: 999.94 MB/sec

stress-ng: info: [22880] successful run completed in 60.00s (1 min, 0.00 secs)

...the memrate stressor will attempt to limit the memory rates, but due to scheduling jitter and other memory activity it may not be 100% accurate. By carefully setting the size of the memory being exercised with the --memrate-bytes option, one can exercise the L1/L2/L3 caches and/or the entire memory.
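The rate limiting can be pictured as "write a block, then sleep until the moment at which that many bytes should have been written at the target rate". The Python sketch below illustrates only that pacing idea. It is not how stress-ng implements memrate, the function name is hypothetical, it assumes 1 MB means 2**20 bytes, and interpreter overhead means it says little about real cache or memory bandwidth.

```python
import time

MB = 1024 * 1024  # assumption: 1 MB = 2**20 bytes in this sketch


def rate_limited_writes(buf: bytearray, target_mb_per_sec: float, passes: int) -> float:
    """Overwrite buf `passes` times, sleeping whenever the writes get ahead
    of target_mb_per_sec. Returns the achieved rate in MB/sec."""
    pattern = bytes([0xA5]) * len(buf)
    start = time.monotonic()
    written = 0
    for _ in range(passes):
        buf[:] = pattern  # one full pass of writes over the buffer
        written += len(buf)
        # The moment by which `written` bytes are due at exactly the target rate.
        due = start + written / (target_mb_per_sec * MB)
        ahead = due - time.monotonic()
        if ahead > 0:
            time.sleep(ahead)  # ahead of schedule: wait to hold the target rate
    return written / MB / (time.monotonic() - start)


print(f"write: {rate_limited_writes(bytearray(MB), 50, 20):.2f} MB/sec")
```

As with memrate, the achieved rate only approximates the target, and a much slower machine may never reach it, in which case no sleeping happens at all.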

By default, the matrix stressor performs matrix operations with an optimal memory access pattern. The new --matrix-yx option instead performs matrix operations in y, x rather than x, y order, causing more cache stalls on larger matrices. This can be useful for exercising cache misses.
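To see why swapping the loop order matters, assume a row-major matrix of 8-byte elements and 64-byte cache lines (both assumptions of this sketch, as is which index is called x). Walking along a row touches each cache line eight times in a row, while walking down a column moves to a new cache line on every access. The Python below just counts those cache line changes for the two traversal orders; the function names are hypothetical and this is not stress-ng code.

```python
def access_order(rows: int, cols: int, yx: bool = False) -> list[int]:
    """Flat element offsets visited when walking a row-major rows x cols matrix.

    The default walks each row left to right (contiguous memory); yx=True
    walks each column top to bottom, striding `cols` elements per step."""
    if yx:
        return [r * cols + c for c in range(cols) for r in range(rows)]
    return [r * cols + c for r in range(rows) for c in range(cols)]


def cache_line_changes(order: list[int], elem_size: int = 8, line_size: int = 64) -> int:
    """Count consecutive accesses that land on a different cache line."""
    lines = [offset * elem_size // line_size for offset in order]
    return sum(1 for a, b in zip(lines, lines[1:]) if a != b)


print(access_order(2, 3))           # [0, 1, 2, 3, 4, 5]
print(access_order(2, 3, yx=True))  # [0, 3, 1, 4, 2, 5]
print(cache_line_changes(access_order(64, 64)))           # 511
print(cache_line_changes(access_order(64, 64, yx=True)))  # 4095
```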

To complement the heapsort, mergesort and qsort memory/CPU-exercising sort stressors, I've added a radixsort stressor (using the BSD library radixsort) to exercise sorting of hundreds of thousands of small text strings.

Finally, while exercising various hugepage kernel configuration options, I was inspired to make stress-ng's mmaps work better with hugepage madvise hints, so where possible all anonymous memory mappings are now private to allow hugepage madvise to work. The stream and vm stressors also have new madvise options to allow one to choose hugepage, nohugepage or normal hints.
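For illustration, a private anonymous mapping with a huge page hint can be requested from Python 3.8+ on Linux via mmap.madvise(). This is a sketch of the general mechanism described above, not stress-ng code. The helper name and its hint strings (mirroring the hugepage, nohugepage and normal choices) are hypothetical, and the hint is silently skipped where the kernel or the Python build does not support it.

```python
import mmap

HUGE_PAGE_SIZE = 2 * 1024 * 1024  # assumption: 2 MiB huge pages, as on x86-64


def hinted_anonymous_mapping(size: int, hint: str = "hugepage") -> mmap.mmap:
    """Create a private anonymous mapping and apply an madvise() hint."""
    advice = {
        "hugepage": getattr(mmap, "MADV_HUGEPAGE", None),
        "nohugepage": getattr(mmap, "MADV_NOHUGEPAGE", None),
        "normal": getattr(mmap, "MADV_NORMAL", None),
    }[hint]
    # fileno -1 plus MAP_PRIVATE gives a private anonymous mapping
    region = mmap.mmap(-1, size, flags=mmap.MAP_PRIVATE)
    if advice is not None:
        try:
            region.madvise(advice)
        except OSError:
            pass  # e.g. EINVAL if transparent huge pages are not available
    return region


buf = hinted_anonymous_mapping(4 * HUGE_PAGE_SIZE, "hugepage")
buf[0] = 0x5A  # first touch; the kernel may now back this with a huge page
buf.close()
```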

As usual, no big changes, just small incremental improvements to this all-purpose stress tool.

Tuesday, 27 June 2017

New features in forkstat V0.02.00


- STAT_PTRC - ptrace attach/detach events

- STAT_UID - UID (and GID) change events

- STAT_SID - SID change events

The following example shows forkstat being used to detect when a process is being traced using ptrace:

sudo ./forkstat -x -e ptrce

Time Event PID UID TTY Info Duration Process

11:42:31 ptrce 17376 0 pts/15 attach strace -p 17350

11:42:31 ptrce 17350 1000 pts/13 attach top

11:42:37 ptrce 17350 1000 pts/13 detach

Process 17376 runs strace on process 17350 (top). We can see the ptrace attach event on the process and then, a few seconds later, the detach event. We can also see that strace was being run from pts/15 by root. Using forkstat we can now snoop on users who are snooping on other users' processes.

I use forkstat mainly to capture busy process fork/exec/exit activity that tools such as ps and top cannot see because of the very short duration of some processes or threads. Sometimes processes are created so rapidly that one needs to run forkstat with a high priority to capture all the events, so the new -r option runs forkstat with a high real time scheduling priority to try to capture all the events.

These new features landed in forkstat V0.02.00 for Ubuntu 17.10 Artful Aardvark.

Thursday, 22 June 2017

Cyclic latency measurements in stress-ng V0.08.06


The cyclic test can be configured to specify the sleep time (in nanoseconds), the scheduling type (rr or fifo), the scheduling priority (1 to 100) and also the sleep method (explained later).

The first 10,000 latency measurements are used to compute various latency statistics:

- mean latency (aka the 'average')

- modal latency (the most 'popular' latency)

- minimum latency

- maximum latency

- standard deviation

- latency percentiles (25%, 50%, 75%, 90%, 95.40%, 99.0%, 99.5%, 99.9% and 99.99%)

- latency distribution (enabled with the --cyclic-dist option)

The latency distribution is shown when the --cyclic-dist option is used; one has to specify the distribution interval in nanoseconds and up to the first 100 values in the distribution are output.
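The statistics listed above are straightforward to compute once the samples have been collected. Here is a minimal Python sketch, assuming latencies in nanoseconds, nearest-rank percentiles and a population standard deviation; stress-ng's exact formulas may differ, and the function names and sample values are hypothetical. The distribution helper mimics the "interval in nanoseconds, at most the first 100 values" behaviour described above.

```python
import math
from collections import Counter

PERCENTILES = (25, 50, 75, 90, 95.4, 99, 99.5, 99.9, 99.99)


def latency_stats(latencies: list[int], percentiles=PERCENTILES) -> dict:
    """Mean, mode, min, max, population standard deviation and
    nearest-rank percentiles of a list of latencies (ns)."""
    data = sorted(latencies)
    n = len(data)
    mean = sum(data) / n
    std_dev = math.sqrt(sum((x - mean) ** 2 for x in data) / n)
    mode = Counter(data).most_common(1)[0][0]  # ties: smallest value wins
    pct = {}
    for p in percentiles:
        rank = math.ceil(p / 100 * n)  # nearest-rank method
        pct[p] = data[min(max(rank, 1), n) - 1]
    return {"mean": mean, "mode": mode, "min": data[0], "max": data[-1],
            "std_dev": std_dev, "percentiles": pct}


def latency_distribution(latencies: list[int], interval_ns: int,
                         max_buckets: int = 100) -> list[tuple[int, int]]:
    """Histogram of latencies in interval_ns wide buckets, first max_buckets only."""
    counts = [0] * max_buckets
    for x in latencies:
        bucket = x // interval_ns
        if 0 <= bucket < max_buckets:
            counts[bucket] += 1
    return [(i * interval_ns, c) for i, c in enumerate(counts)]


samples = [5200, 4880, 4880, 5261, 9821, 3050]
stats = latency_stats(samples)
print(stats["mean"], stats["mode"], stats["percentiles"][50])
print(latency_distribution(samples, 1000, 12))
```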

For an idle machine, one can invoke just the cyclic measurements with stress-ng as follows:

sudo stress-ng --cyclic 1 --cyclic-policy fifo \
--cyclic-prio 100 --cyclic-method clock_ns \
--cyclic-sleep 20000 --cyclic-dist 1000 -t 5

stress-ng: info: [27594] dispatching hogs: 1 cyclic

stress-ng: info: [27595] stress-ng-cyclic: sched SCHED_FIFO: 20000 ns delay, 10000 samples

stress-ng: info: [27595] stress-ng-cyclic: mean: 5242.86 ns, mode: 4880 ns

stress-ng: info: [27595] stress-ng-cyclic: min: 3050 ns, max: 44818 ns, std.dev. 1142.92

stress-ng: info: [27595] stress-ng-cyclic: latency percentiles:

stress-ng: info: [27595] stress-ng-cyclic: 25.00%: 4881 us

stress-ng: info: [27595] stress-ng-cyclic: 50.00%: 5191 us

stress-ng: info: [27595] stress-ng-cyclic: 75.00%: 5261 us

stress-ng: info: [27595] stress-ng-cyclic: 90.00%: 5368 us

stress-ng: info: [27595] stress-ng-cyclic: 95.40%: 6857 us

stress-ng: info: [27595] stress-ng-cyclic: 99.00%: 8942 us

stress-ng: info: [27595] stress-ng-cyclic: 99.50%: 9821 us

stress-ng: info: [27595] stress-ng-cyclic: 99.90%: 22210 us

stress-ng: info: [27595] stress-ng-cyclic: 99.99%: 36074 us

stress-ng: info: [27595] stress-ng-cyclic: latency distribution (1000 us intervals):

stress-ng: info: [27595] stress-ng-cyclic: latency (us) frequency

stress-ng: info: [27595] stress-ng-cyclic: 0 0

stress-ng: info: [27595] stress-ng-cyclic: 1000 0

stress-ng: info: [27595] stress-ng-cyclic: 2000 0

stress-ng: info: [27595] stress-ng-cyclic: 3000 82

stress-ng: info: [27595] stress-ng-cyclic: 4000 3342

stress-ng: info: [27595] stress-ng-cyclic: 5000 5974

stress-ng: info: [27595] stress-ng-cyclic: 6000 197

stress-ng: info: [27595] stress-ng-cyclic: 7000 209

stress-ng: info: [27595] stress-ng-cyclic: 8000 100

stress-ng: info: [27595] stress-ng-cyclic: 9000 50

stress-ng: info: [27595] stress-ng-cyclic: 10000 10

stress-ng: info: [27595] stress-ng-cyclic: 11000 9

stress-ng: info: [27595] stress-ng-cyclic: 12000 2

stress-ng: info: [27595] stress-ng-cyclic: 13000 2

stress-ng: info: [27595] stress-ng-cyclic: 14000 1

stress-ng: info: [27595] stress-ng-cyclic: 15000 9

stress-ng: info: [27595] stress-ng-cyclic: 16000 1

stress-ng: info: [27595] stress-ng-cyclic: 17000 1

stress-ng: info: [27595] stress-ng-cyclic: 18000 0

stress-ng: info: [27595] stress-ng-cyclic: 19000 0

stress-ng: info: [27595] stress-ng-cyclic: 20000 0

stress-ng: info: [27595] stress-ng-cyclic: 21000 1

stress-ng: info: [27595] stress-ng-cyclic: 22000 1

stress-ng: info: [27595] stress-ng-cyclic: 23000 0

stress-ng: info: [27595] stress-ng-cyclic: 24000 1

stress-ng: info: [27595] stress-ng-cyclic: 25000 2

stress-ng: info: [27595] stress-ng-cyclic: 26000 0

stress-ng: info: [27595] stress-ng-cyclic: 27000 1

stress-ng: info: [27595] stress-ng-cyclic: 28000 1

stress-ng: info: [27595] stress-ng-cyclic: 29000 2

stress-ng: info: [27595] stress-ng-cyclic: 30000 0

stress-ng: info: [27595] stress-ng-cyclic: 31000 0

stress-ng: info: [27595] stress-ng-cyclic: 32000 0

stress-ng: info: [27595] stress-ng-cyclic: 33000 0

stress-ng: info: [27595] stress-ng-cyclic: 34000 0

stress-ng: info: [27595] stress-ng-cyclic: 35000 0

stress-ng: info: [27595] stress-ng-cyclic: 36000 1

stress-ng: info: [27595] stress-ng-cyclic: 37000 0

stress-ng: info: [27595] stress-ng-cyclic: 38000 0

stress-ng: info: [27595] stress-ng-cyclic: 39000 0

stress-ng: info: [27595] stress-ng-cyclic: 40000 0

stress-ng: info: [27595] stress-ng-cyclic: 41000 0

stress-ng: info: [27595] stress-ng-cyclic: 42000 0

stress-ng: info: [27595] stress-ng-cyclic: 43000 0

stress-ng: info: [27595] stress-ng-cyclic: 44000 1

stress-ng: info: [27594] successful run completed in 5.00s

Note that stress-ng needs to be invoked using sudo to enable the Real Time FIFO scheduling for the cyclic measurements.

The above example uses the following options:

- --cyclic 1: starts one instance of the cyclic measurements (1 is always recommended)

- --cyclic-policy fifo: use the real time First-In-First-Out scheduling policy for the cyclic measurements

- --cyclic-prio 100: use the maximum scheduling priority

- --cyclic-method clock_ns: use the clock_nanosleep(2) system call to perform the high precision duration sleep

- --cyclic-sleep 20000: sleep for 20000 nanoseconds per cyclic iteration

- --cyclic-dist 1000: enable latency distribution statistics with an interval of 1000 nanoseconds between each data point

- -t 5: run for just 5 seconds

Now for the interesting part. Since stress-ng is packed with many different stressors we can run these while performing the cyclic measurements, for example, we can tell stress-ng to run *all* the virtual memory related stress tests and see how this affects the latency distribution using the following:

sudo stress-ng --cyclic 1 --cyclic-policy fifo \
--cyclic-prio 100 --cyclic-method clock_ns \
--cyclic-sleep 20000 --cyclic-dist 1000 \
--class vm --all 1 -t 60s

..the above runs all the stressors in the vm class at the same time (with just one instance of each stressor) for 60 seconds.

The --cyclic-method option specifies the delay method used on each of the 10,000 cyclic iterations. The default (and recommended) method is clock_ns, which uses a high precision delay. The available cyclic delay methods are:

- clock_ns (use the clock_nanosleep() sleep)

- posix_ns (use the POSIX nanosleep() sleep)

- itimer (use a high precision clock timer and pause to wait for a signal to measure latency)

- poll (busy spin-wait on clock_gettime() to eat cycles for a delay)
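The core of a cyclic measurement is "ask to sleep for N nanoseconds, then see how much later than that you actually woke up". The Python sketch below approximates two of the methods: time.sleep() stands in for the clock_ns sleep, and the poll variant spins on time.monotonic_ns(). Optionally it tries to switch to SCHED_FIFO at the maximum priority, which, as with stress-ng, needs root. Interpreter overhead inflates the numbers, so treat it as an illustration only; the function name is hypothetical.

```python
import os
import time


def cyclic_latencies(samples: int, sleep_ns: int, method: str = "sleep",
                     realtime: bool = False) -> list[int]:
    """Return how many ns each wake-up overshot the requested delay."""
    if realtime:
        try:
            prio = os.sched_get_priority_max(os.SCHED_FIFO)
            os.sched_setscheduler(0, os.SCHED_FIFO, os.sched_param(prio))
        except PermissionError:
            pass  # needs root (or CAP_SYS_NICE); keep normal scheduling
    latencies = []
    for _ in range(samples):
        start = time.monotonic_ns()
        if method == "poll":
            while time.monotonic_ns() - start < sleep_ns:
                pass  # busy spin-wait, burning cycles like the poll method
        else:
            time.sleep(sleep_ns / 1e9)
        latencies.append(time.monotonic_ns() - start - sleep_ns)
    return latencies


lat = cyclic_latencies(1000, 20000)
print(f"min {min(lat)} ns, max {max(lat)} ns, mean {sum(lat) / len(lat):.2f} ns")
```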

I hope this is plenty of cyclic measurement functionality to get some useful latency benchmarks against various kernel components when using some or a mix of the stress-ng stressors. Let me know if I am missing some other cyclic measurement options and I can see if I can add them in.

Keep stressing and measuring those systems!

Monday, 15 May 2017

Firmware Test Suite Text Based Front-End

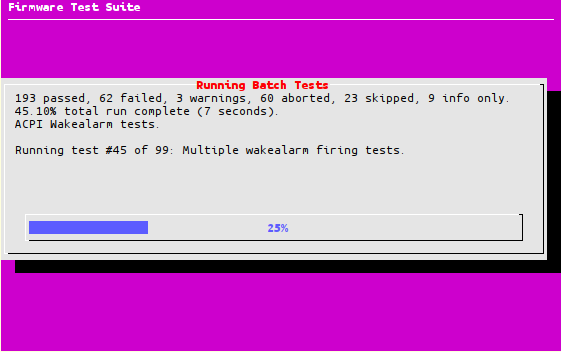


The Firmware Test Suite (FWTS) has an easy to use text based front-end that is primarily used by the FWTS Live-CD image but it can also be used in the Ubuntu terminal. To install and run the front-end use:

sudo apt-get install fwts-frontend

sudo fwts-frontend-text

..and one should see a menu of options:

In this demonstration, the "All Batch Tests" option has been selected:

Tests will be run one by one and a progress bar shows the progress of each test. Some tests run very quickly, others can take several minutes depending on the hardware configuration (such as number of processors).

Once the tests are all complete, the following dialogue box is displayed:

The test run has saved several files into the directory /fwts/15052017/1748/, and by selecting Yes one can view the results log in a scroll-box:

On exiting this, the FWTS front-end dialog is displayed:

Press enter to exit (note that the Poweroff option is just for the fwts Live-CD image version of fwts-frontend).

The tool dumps various logs, for example, the above run generated:

ls -alt /fwts/15052017/1748/

total 1388

drwxr-xr-x 5 root root 4096 May 15 18:09 ..

drwxr-xr-x 2 root root 4096 May 15 17:49 .

-rw-r--r-- 1 root root 358666 May 15 17:49 acpidump.log

-rw-r--r-- 1 root root 3808 May 15 17:49 cpuinfo.log

-rw-r--r-- 1 root root 22238 May 15 17:49 lspci.log

-rw-r--r-- 1 root root 19136 May 15 17:49 dmidecode.log

-rw-r--r-- 1 root root 79323 May 15 17:49 dmesg.log

-rw-r--r-- 1 root root 311 May 15 17:49 README.txt

-rw-r--r-- 1 root root 631370 May 15 17:49 results.html

-rw-r--r-- 1 root root 281371 May 15 17:49 results.log

acpidump.log is a dump of the ACPI tables in a format compatible with the ACPICA acpidump tool. The results.log file is a copy of the results generated by FWTS and results.html is an HTML formatted version of the log.

Thursday, 5 January 2017

BCC: a powerful front end to extended Berkeley Packet Filters

The BPF Compiler Collection (BCC) is a toolkit for building kernel tracing tools that leverage the functionality provided by the Linux extended Berkeley Packet Filters (BPF).

BCC allows one to write BPF programs with front-ends in Python or Lua with kernel instrumentation written in C. The instrumentation code is built into sandboxed eBPF byte code and is executed in the kernel.

The BCC GitHub project README file provides an excellent overview and description of BCC and the various available BCC tools. Building BCC from scratch can be a bit time consuming; however, the good news is that the BCC tools are now available as a snap, so BCC can be quickly and easily installed using:

sudo snap install --devmode bcc

There are currently over 50 BCC tools in the snap, so let's have a quick look at a few:

cachetop allows one to view the top page cache hit/miss statistics. To run this use:

sudo bcc.cachetop

The funccount tool allows one to count the number of times specific functions get called. For example, to see how many kernel functions with the name starting with "do_" get called per second one can use:

sudo bcc.funccount "do_*" -i 1

To see how to use all the options in this tool, use the -h option:

sudo bcc.funccount -h

I've found the funccount tool to be especially useful for checking kernel activity by looking at hits on specific function names.

The slabratetop tool is useful to see the active kernel SLAB/SLUB memory allocation rates:

sudo bcc.slabratetop

If you want to see which process is opening specific files, one can snoop on open system calls using the opensnoop tool:

sudo bcc.opensnoop -T

Hopefully this will give you a taste of the useful tools that are available in BCC (I have barely scratched the surface in this article). I recommend installing the snap and giving it a try.

As it stands, BCC provides a useful mechanism to develop BPF tracing tools and I look forward to regularly updating the BCC snap as more tools are added to BCC. Kudos to Brendan Gregg for BCC!

Wednesday, 4 May 2016

Some extra features landing in stress-ng 0.06.00

Recently I've been adding a few more features into stress-ng to improve kernel code coverage. I'm currently using a kernel built with gcov enabled and using the most excellent lcov tool to collate the coverage data and produce some rather useful coverage charts.

With a gcov enabled kernel, gathering coverage stats is a trivial process with lcov:

sudo apt-get install lcov

sudo lcov --zerocounters

stress-ng --seq 0 -t 60

sudo lcov -c -o kernel.info

sudo genhtml -o html kernel.info

..and the html output appears in the html directory.

In the latest 0.06.00 release of stress-ng, the following new features have been introduced:

- af-alg stressor, added skciphers and rngs

- new Translation Lookaside Buffer (TLB) shootdown stressor

- new /dev/full stressor

- hdd stressor now works through all the different hdd options if --maximize is used

- wider procfs stressing

- added more keyctl commands to the key stressor

- new msync stressor, exercise msync of mmap'd memory back to file and from file back to memory.

- Real Time Clock (RTC) stressor (via /dev/rtc and /proc/driver/rtc)

- taskset option, allowing one to run stressors on specific CPUs (affinity setting)

- inotify stressor now also exercises the FIONREAD ioctl()

- and some bug fixes found when testing stress-ng on various architectures.

stress-ng --taskset 1,3,5-7 --cpu 5
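The --taskset argument uses the familiar CPU list syntax. As a sketch of what such an option has to do (not stress-ng's implementation; the helper names are hypothetical), the Python below expands a list like "1,3,5-7" and pins the calling process with sched_setaffinity(2), keeping only CPUs the process is actually allowed to use.

```python
import os


def parse_cpu_list(spec: str) -> set[int]:
    """Expand a CPU list such as "1,3,5-7" into {1, 3, 5, 6, 7}."""
    cpus = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            lo, hi = (int(x) for x in part.split("-", 1))
            if lo > hi:
                raise ValueError(f"bad CPU range: {part!r}")
            cpus.update(range(lo, hi + 1))
        else:
            cpus.add(int(part))
    return cpus


def pin_to(spec: str) -> set[int]:
    """Restrict the calling process to the listed CPUs it is allowed to use."""
    wanted = parse_cpu_list(spec) & os.sched_getaffinity(0)
    if not wanted:
        raise ValueError(f"none of the CPUs in {spec!r} are available")
    os.sched_setaffinity(0, wanted)
    return os.sched_getaffinity(0)


print(sorted(parse_cpu_list("1,3,5-7")))  # [1, 3, 5, 6, 7]
```

Calling pin_to("1,3,5-7") before starting the work would then keep the process on whichever of those CPUs exist on the machine.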

..thanks to Jim Rowan (Intel) for the CPU affinity ideas.

stress-ng 0.06.00 will be landing in Ubuntu Yakkety soon, and also in my power utilities PPA ppa:colin-king/white

Monday, 15 February 2016

New "top" mode in eventstat

I wrote eventstat a few years ago to track wakeup events that keep a machine from being fully idle. For Ubuntu Xenial Xerus 16.04 I've added a 'top'-like mode (enabled using the -T option).

By widening the terminal one can see more of the Task, Init Function and Callback text, which is useful as these details can be rather lengthy.

Anyhow, just a minor feature change, but hopefully a useful one.

Thursday, 4 February 2016

Intel Platform Quality of Service and Cache Allocation Technology

One issue when running parallel processes is contention for shared resources such as the Last Level Cache (aka LLC or L3 cache). For example, a server may be running a set of Virtual Machines with memory- and cache-intensive processes that produce a large amount of cache activity. This can impact the other VMs and is known as the "Noisy Neighbour" problem.

Fortunately, the next generation of Intel processors allows one to monitor and also fine-tune cache allocation using Intel Cache Monitoring Technology (CMT) and Cache Allocation Technology (CAT).

Intel kindly loaned me a 12-thread development machine with CMT and CAT support to experiment with this technology using the Intel pqos tool. For my experiment, I installed Ubuntu Xenial Server on the machine, then installed KVM and a VM instance of Ubuntu Xenial Server. I then loaded the instance using stress-ng running a memory bandwidth stressor:

stress-ng --stream 1 -v --stream-l3-size 16M

Using pqos, one can monitor and see the cache/memory activity:

sudo apt-get install intel-cmt-cat

sudo modprobe msr

sudo pqos -r

TIME 2016-02-04 10:25:06

CORE IPC MISSES LLC[KB] MBL[MB/s] MBR[MB/s]

0 0.59 168259k 9144.0 12195.0 0.0

1 1.33 107k 0.0 3.3 0.0

2 0.20 2k 0.0 0.0 0.0

3 0.70 104k 0.0 2.0 0.0

4 0.86 23k 0.0 0.7 0.0

5 0.38 42k 24.0 1.5 0.0

6 0.12 2k 0.0 0.0 0.0

7 0.24 48k 0.0 3.0 0.0

8 0.61 26k 0.0 1.6 0.0

9 0.37 11k 144.0 0.9 0.0

10 0.48 1k 0.0 0.0 0.0

11 0.45 2k 0.0 0.0 0.0

stress-ng --stream 4 --stream-l3-size 2M --perf --metrics-brief -t 60

stress-ng: info: [2195] dispatching hogs: 4 stream

stress-ng: info: [2196] stress-ng-stream: stressor loosely based on a variant of the STREAM benchmark code

stress-ng: info: [2196] stress-ng-stream: do NOT submit any of these results to the STREAM benchmark results

stress-ng: info: [2196] stress-ng-stream: Using L3 CPU cache size of 2048K

stress-ng: info: [2196] stress-ng-stream: memory rate: 1842.22 MB/sec, 736.89 Mflop/sec (instance 0)

stress-ng: info: [2198] stress-ng-stream: memory rate: 1847.88 MB/sec, 739.15 Mflop/sec (instance 2)

stress-ng: info: [2199] stress-ng-stream: memory rate: 1833.89 MB/sec, 733.56 Mflop/sec (instance 3)

stress-ng: info: [2197] stress-ng-stream: memory rate: 1847.16 MB/sec, 738.86 Mflop/sec (instance 1)

stress-ng: info: [2195] successful run completed in 60.01s (1 min, 0.01 secs)

stress-ng: info: [2195] stressor bogo ops real time usr time sys time bogo ops/s bogo ops/s

stress-ng: info: [2195] (secs) (secs) (secs) (real time) (usr+sys time)

stress-ng: info: [2195] stream 22101 60.01 239.93 0.04 368.31 92.10

stress-ng: info: [2195] stream:

stress-ng: info: [2195] 547,520,600,744 CPU Cycles 9.12 B/sec

stress-ng: info: [2195] 69,959,954,760 Instructions 1.17 B/sec (0.128 instr. per cycle)

stress-ng: info: [2195] 11,066,905,620 Cache References 0.18 B/sec

stress-ng: info: [2195] 11,065,068,064 Cache Misses 0.18 B/sec (99.98%)

stress-ng: info: [2195] 8,759,154,716 Branch Instructions 0.15 B/sec

stress-ng: info: [2195] 2,205,904 Branch Misses 36.76 K/sec ( 0.03%)

stress-ng: info: [2195] 23,856,890,232 Bus Cycles 0.40 B/sec

stress-ng: info: [2195] 477,143,689,444 Total Cycles 7.95 B/sec

stress-ng: info: [2195] 36 Page Faults Minor 0.60 sec

stress-ng: info: [2195] 0 Page Faults Major 0.00 sec

stress-ng: info: [2195] 96 Context Switches 1.60 sec

stress-ng: info: [2195] 0 CPU Migrations 0.00 sec

stress-ng: info: [2195] 0 Alignment Faults 0.00 sec

sudo pqos -r

TIME 2016-02-04 10:35:27

CORE IPC MISSES LLC[KB] MBL[MB/s] MBR[MB/s]

0 0.14 43060k 1104.0 2487.9 0.0

1 0.12 3981523k 2616.0 2893.8 0.0

2 0.26 320k 48.0 18.0 0.0

3 0.12 3980489k 1800.0 2572.2 0.0

4 0.12 3979094k 1728.0 2870.3 0.0

5 0.12 3970996k 2112.0 2734.5 0.0

6 0.04 20k 0.0 0.3 0.0

7 0.04 29k 0.0 1.9 0.0

8 0.09 143k 0.0 5.9 0.0

9 0.15 0k 0.0 0.0 0.0

10 0.07 2k 0.0 0.0 0.0

11 0.13 0k 0.0 0.0 0.0

sudo pqos -v

NOTE: Mixed use of MSR and kernel interfaces to manage

CAT or CMT & MBM may lead to unexpected behavior.

INFO: Monitoring capability detected

INFO: CPUID.0x7.0: CAT supported

INFO: CAT details: CDP support=0, CDP on=0, #COS=16, #ways=12, ways contention bit-mask 0xc00

INFO: LLC cache size 9437184 bytes, 12 ways

INFO: LLC cache way size 786432 bytes

INFO: L3CA capability detected

INFO: Detected PID API (perf) support for LLC Occupancy

INFO: Detected PID API (perf) support for Instructions/Cycle

INFO: Detected PID API (perf) support for LLC Misses

ERROR: IPC and/or LLC miss performance counters already in use!

Use -r option to start monitoring anyway.

Monitoring start error on core(s) 5, status 6

In my experiment, I want to create two Classes of Service (COS). The first COS will have just 1 cache way assigned to it; CPU 0 will be bound to this COS and the VM instance will be pinned to CPU 0. The second COS will have the other 11 cache ways assigned to it, and all the other CPUs can use this COS.

So, create COS #1 with just 1 way of cache, and bind CPU 0 to this COS, and pin the VM to CPU 0:

sudo pqos -e llc:1=0x0001

sudo pqos -a llc:1=0

sudo taskset -apc 0 $(pidof qemu-system-x86_64)

sudo pqos -e "llc:2=0x0ffe"

sudo pqos -a "llc:2=1-11"

sudo pqos -s

NOTE: Mixed use of MSR and kernel interfaces to manage

CAT or CMT & MBM may lead to unexpected behavior.

L3CA COS definitions for Socket 0:

L3CA COS0 => MASK 0xfff

L3CA COS1 => MASK 0x1

L3CA COS2 => MASK 0xffe

L3CA COS3 => MASK 0xfff

L3CA COS4 => MASK 0xfff

L3CA COS5 => MASK 0xfff

L3CA COS6 => MASK 0xfff

L3CA COS7 => MASK 0xfff

L3CA COS8 => MASK 0xfff

L3CA COS9 => MASK 0xfff

L3CA COS10 => MASK 0xfff

L3CA COS11 => MASK 0xfff

L3CA COS12 => MASK 0xfff

L3CA COS13 => MASK 0xfff

L3CA COS14 => MASK 0xfff

L3CA COS15 => MASK 0xfff

Core information for socket 0:

Core 0 => COS1, RMID0

Core 1 => COS2, RMID0

Core 2 => COS2, RMID0

Core 3 => COS2, RMID0

Core 4 => COS2, RMID0

Core 5 => COS2, RMID0

Core 6 => COS2, RMID0

Core 7 => COS2, RMID0

Core 8 => COS2, RMID0

Core 9 => COS2, RMID0

Core 10 => COS2, RMID0

Core 11 => COS2, RMID0
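The masks above can be sanity-checked with a little arithmetic. pqos -v reported 12 ways of 786432 bytes each, so COS1 (0x1) gets one way (768 KiB) and COS2 (0xffe) gets 11 ways (8448 KiB). The minimal C sketch below, using hypothetical helper names, counts the ways in a mask and checks that its set bits are contiguous, which Intel's CAT documentation requires of capacity bitmasks on processors of this generation (later parts may relax this).

```c
#include <stdint.h>

/* Count the set bits (cache ways) in a CAT capacity bitmask. */
static unsigned int count_ways(uint32_t mask)
{
	unsigned int n = 0;

	while (mask) {
		mask &= mask - 1;	/* clear the lowest set bit */
		n++;
	}
	return n;
}

/* Return 1 if mask is a single non-empty run of contiguous set bits. */
int cat_mask_contiguous(uint32_t mask)
{
	if (mask == 0)
		return 0;
	while ((mask & 1u) == 0)
		mask >>= 1;		/* drop trailing zero bits */
	/* a run of ones plus one has no bits in common with the run */
	return (mask & (mask + 1u)) == 0;
}

/* Bytes of LLC available to a COS with the given mask. */
uint64_t cat_mask_bytes(uint32_t mask, uint64_t way_size)
{
	return (uint64_t)count_ways(mask) * way_size;
}
```

The two masks also do not overlap (0x1 & 0xffe == 0), so the VM pinned to CPU 0 and the remaining CPUs are confined to disjoint portions of the LLC.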

Now re-run the stream stressor and see if the VM has less impact on the L3 cache:

stress-ng --stream 4 --stream-l3-size 1M --perf --metrics-brief -t 60

stress-ng: info: [2232] dispatching hogs: 4 stream

stress-ng: info: [2233] stress-ng-stream: stressor loosely based on a variant of the STREAM benchmark code

stress-ng: info: [2233] stress-ng-stream: do NOT submit any of these results to the STREAM benchmark results

stress-ng: info: [2233] stress-ng-stream: Using L3 CPU cache size of 1024K

stress-ng: info: [2235] stress-ng-stream: memory rate: 2616.90 MB/sec, 1046.76 Mflop/sec (instance 2)

stress-ng: info: [2233] stress-ng-stream: memory rate: 2562.97 MB/sec, 1025.19 Mflop/sec (instance 0)

stress-ng: info: [2234] stress-ng-stream: memory rate: 2541.10 MB/sec, 1016.44 Mflop/sec (instance 1)

stress-ng: info: [2236] stress-ng-stream: memory rate: 2652.02 MB/sec, 1060.81 Mflop/sec (instance 3)

stress-ng: info: [2232] successful run completed in 60.00s (1 min, 0.00 secs)

stress-ng: info: [2232] stressor bogo ops real time usr time sys time bogo ops/s bogo ops/s

stress-ng: info: [2232] (secs) (secs) (secs) (real time) (usr+sys time)

stress-ng: info: [2232] stream 62223 60.00 239.97 0.00 1037.01 259.29

stress-ng: info: [2232] stream:

stress-ng: info: [2232] 547,364,185,528 CPU Cycles 9.12 B/sec

stress-ng: info: [2232] 97,037,047,444 Instructions 1.62 B/sec (0.177 instr. per cycle)

stress-ng: info: [2232] 14,396,274,512 Cache References 0.24 B/sec

stress-ng: info: [2232] 14,390,808,440 Cache Misses 0.24 B/sec (99.96%)

stress-ng: info: [2232] 12,144,372,800 Branch Instructions 0.20 B/sec

stress-ng: info: [2232] 1,732,264 Branch Misses 28.87 K/sec ( 0.01%)

stress-ng: info: [2232] 23,856,388,872 Bus Cycles 0.40 B/sec

stress-ng: info: [2232] 477,136,188,248 Total Cycles 7.95 B/sec

stress-ng: info: [2232] 44 Page Faults Minor 0.73 sec

stress-ng: info: [2232] 0 Page Faults Major 0.00 sec

stress-ng: info: [2232] 72 Context Switches 1.20 sec

stress-ng: info: [2232] 0 CPU Migrations 0.00 sec

stress-ng: info: [2232] 0 Alignment Faults 0.00 sec

This is a relatively simple example. With the ability to monitor cache and memory bandwidth activity, one can carefully tune a system to make the best use of the limited L3 cache resource and maximise throughput where needed.

There are many applications where Intel CMT/CAT can be useful, for example fine-tuning containers or VM instances, or pinning user space networking buffers to cache ways in DPDK for improved throughput.

Sunday, 31 January 2016

Pagemon improvements

Over the past month I've been finding the odd moments [1] to add some small improvements to pagemon (a tool to monitor process memory) and to fix a few bugs. The original code went from a sketchy proof-of-concept prototype to a somewhat more usable tool in a few weeks, so my main concern recently was to clean up the code and make it more efficient.

With the use of tools such as valgrind's cachegrind and perf I was able to work on some of the code hot-spots [2] and reduce pagemon's CPU utilisation on my laptop from ~50-60% down to 5-9%, so it's definitely more machine friendly now. In addition I've added the following small features:

- Now one can specify the name of a process to monitor as well as the PID. This also allows one to run pagemon on itself(!), which is a bit meta.

- Perf events showing Page Faults and Kernel Page Allocates and Frees, toggled on/off with the 'p' key.

- Improved and snappier clean up and exit when a monitored process exits.

- Far more efficient page map reading and rendering.

- Out of Memory (OOM) scores added to VM statistics window.

- Process activity (busy, sleeping, etc.) added to VM statistics window.

- Zoom mode min/max with '[' (min) and ']' (max) keys.

- Close pop-up windows with key 'c'.

- Improved handling of rapid map expansion and shrinking.

- Jump to end of map using 'End' key.

- Improved the man page.

Version 0.01.08 should be hitting the Ubuntu 16.04 Xenial Xerus archive in the next 24 hours or so. I also have the latest version in my PPA (ppa:colin-king/pagemon) built for Trusty, Vivid, Wily and Xenial.

Pagemon is useful for spotting unexpected memory activity, and it is just interesting to watch the behaviour of memory-hungry processes such as web browsers and Virtual Machines.

Notes:

[1] Mainly very late at night when I can't sleep (but that's another story...). The git log says it all.

[2] Reading in /proc/$PID/maps and efficiently reading per page data from /proc/$PID/pagemap
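Note [2] refers to reading per-page data from /proc/$PID/pagemap. As a rough illustration (not pagemon's code), the sketch below reads the 8-byte entry for one virtual address and decodes the present and swapped flags; the helper names are hypothetical, and the bit positions follow the kernel's pagemap documentation. A real tool would read many entries per pread() rather than one per page.

```c
#include <fcntl.h>
#include <stdint.h>
#include <stdio.h>
#include <sys/types.h>
#include <unistd.h>

/* Bit layout of a /proc/$PID/pagemap entry, per the kernel's
   Documentation/admin-guide/mm/pagemap.rst */
#define PM_PRESENT  (UINT64_C(1) << 63)
#define PM_SWAPPED  (UINT64_C(1) << 62)
#define PM_PFN_MASK ((UINT64_C(1) << 55) - 1)	/* bits 0-54 */

typedef struct {
	int present;	/* page is resident in RAM */
	int swapped;	/* page is in swap */
	uint64_t pfn;	/* page frame number, 0 if not present */
} page_info_t;

page_info_t decode_pagemap_entry(uint64_t entry)
{
	page_info_t info;

	info.present = (entry & PM_PRESENT) != 0;
	info.swapped = (entry & PM_SWAPPED) != 0;
	/* When swapped, bits 0-54 hold swap type/offset instead of a PFN */
	info.pfn = info.present ? (entry & PM_PFN_MASK) : 0;
	return info;
}

/* Read the pagemap entry covering virtual address vaddr of process pid.
   There is one 8-byte entry per page, indexed by virtual page number. */
int read_pagemap_entry(pid_t pid, uintptr_t vaddr, uint64_t *entry)
{
	char path[64];
	long page_size = sysconf(_SC_PAGESIZE);
	off_t offset;
	ssize_t n;
	int fd;

	if (page_size <= 0)
		return -1;
	offset = (off_t)(vaddr / (uintptr_t)page_size) * (off_t)sizeof(*entry);
	snprintf(path, sizeof(path), "/proc/%d/pagemap", (int)pid);
	fd = open(path, O_RDONLY);
	if (fd < 0)
		return -1;
	n = pread(fd, entry, sizeof(*entry), offset);
	close(fd);
	return n == (ssize_t)sizeof(*entry) ? 0 : -1;
}
```

For example, read_pagemap_entry(getpid(), (uintptr_t)buf, &e) followed by decode_pagemap_entry(e).present indicates whether the page holding buf is resident. Since Linux 4.2 the PFN field reads as zero unless the reader has CAP_SYS_ADMIN, while the present and swapped flags remain visible.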

Saturday, 17 October 2015

combining RAPL and perf to do power calibration

A useful feature on modern x86 CPUs is the Running Average Power Limit (RAPL) interface, which allows one to monitor System on Chip (SoC) power consumption. Combining this data with the ability to accurately measure CPU cycles and instructions via perf, we can get a rough estimate of the energy consumed to perform a single operation on the CPU.

power-calibrate is a simple tool that I hacked up to perform some synthetic loading of the processor, gather the RAPL and CPU stats and use simple linear regression to compute some power-related metrics.

In the example below, I run power-calibrate on an Intel i5-3210M (2 cores, 4 threads) with each test run taking 10 seconds (-r 10), using the RAPL interface to measure power (-R) and gathering 11 samples (-s 11) on CPU threads 1..4:

power-calibrate -r 10 -R -s 11

CPU load User Sys Idle Run Ctxt/s IRQ/s Ops/s Cycl/s Inst/s Watts

0% x 1 0.1 0.1 99.8 1.0 181.6 61.1 0.0 2.5K 380.2 2.485

0% x 2 0.0 1.0 98.9 1.2 161.8 63.8 0.0 5.7K 0.8K 2.366

0% x 3 0.1 1.3 98.5 1.1 204.2 75.2 0.0 7.6K 1.9K 2.518

0% x 4 0.1 0.1 99.9 1.0 124.7 44.9 0.0 11.4K 2.7K 2.167

10% x 1 2.4 0.2 97.4 1.5 203.8 104.9 21.3M 123.1M 297.8M 2.636

10% x 2 5.1 0.0 94.9 1.3 185.0 137.1 42.0M 243.0M 0.6B 2.754

10% x 3 7.5 0.2 92.3 1.2 275.3 190.3 58.1M 386.9M 0.8B 3.058

10% x 4 10.0 0.1 89.9 1.9 213.5 206.1 64.5M 486.1M 0.9B 2.826

20% x 1 5.0 0.1 94.9 1.0 288.8 170.0 69.6M 403.0M 1.0B 3.283

20% x 2 10.0 0.1 89.9 1.6 310.2 248.7 96.4M 0.8B 1.3B 3.248

20% x 3 14.6 0.4 85.0 1.7 640.8 450.4 238.9M 1.7B 3.3B 5.234

20% x 4 20.0 0.2 79.8 2.1 633.4 514.6 270.5M 2.1B 3.8B 4.736

30% x 1 7.5 0.2 92.3 1.4 444.3 278.7 149.9M 0.9B 2.1B 4.631

30% x 2 14.8 1.2 84.0 1.2 541.5 418.1 200.4M 1.7B 2.8B 4.617

30% x 3 22.6 1.5 75.9 2.2 960.9 694.3 365.8M 2.6B 5.1B 7.080

30% x 4 30.0 0.2 69.8 2.4 959.2 774.8 421.1M 3.4B 5.9B 5.940

40% x 1 9.7 0.3 90.0 1.7 551.6 356.8 201.6M 1.2B 2.8B 5.498

40% x 2 19.9 0.3 79.8 1.4 668.0 539.4 288.0M 2.4B 4.0B 5.604

40% x 3 29.8 0.5 69.7 1.8 1124.5 851.8 481.4M 3.5B 6.7B 7.918

40% x 4 40.3 0.5 59.2 2.3 1186.4 1006.7 0.6B 4.6B 7.7B 6.982

50% x 1 12.1 0.4 87.4 1.7 536.4 378.6 193.1M 1.1B 2.7B 4.793

50% x 2 24.4 0.4 75.2 2.2 816.2 668.2 362.6M 3.0B 5.1B 6.493

50% x 3 35.8 0.5 63.7 3.1 1300.2 1004.6 0.6B 4.2B 8.2B 8.800

50% x 4 49.4 0.7 49.9 3.8 1455.2 1240.0 0.7B 5.7B 9.6B 8.130

60% x 1 14.5 0.4 85.1 1.8 735.0 502.7 295.7M 1.7B 4.1B 6.927

60% x 2 29.4 1.3 69.4 2.0 917.5 759.4 397.2M 3.3B 5.6B 6.791

60% x 3 44.1 1.7 54.2 3.1 1615.4 1243.6 0.7B 5.1B 9.9B 10.056

60% x 4 58.5 0.7 40.8 4.0 1728.1 1456.6 0.8B 6.8B 11.5B 9.226

70% x 1 16.8 0.3 82.9 1.9 841.8 579.5 349.3M 2.0B 4.9B 7.856

70% x 2 34.1 0.8 65.0 2.8 966.0 845.2 439.4M 3.7B 6.2B 6.800

70% x 3 49.7 0.5 49.8 3.5 1834.5 1401.2 0.8B 5.9B 11.8B 11.113

70% x 4 68.1 0.6 31.4 4.7 1771.3 1572.3 0.8B 7.0B 11.8B 8.809

80% x 1 18.9 0.4 80.7 1.9 871.9 613.0 357.1M 2.1B 5.0B 7.276

80% x 2 38.6 0.3 61.0 2.8 1268.6 1029.0 0.6B 4.8B 8.2B 9.253

80% x 3 58.8 0.3 40.8 3.5 2061.7 1623.3 1.0B 6.8B 13.6B 11.967

80% x 4 78.6 0.5 20.9 4.0 2356.3 1983.7 1.1B 9.0B 16.0B 12.047

90% x 1 21.8 0.3 78.0 2.0 1054.5 737.9 459.3M 2.6B 6.4B 9.613

90% x 2 44.2 1.2 54.7 2.7 1439.5 1174.7 0.7B 5.4B 9.2B 10.001

90% x 3 66.2 1.4 32.4 3.9 2326.2 1822.3 1.1B 7.6B 15.0B 12.579

90% x 4 88.5 0.2 11.4 4.8 2627.8 2219.1 1.3B 10.2B 17.8B 12.832

100% x 1 25.1 0.0 74.8 2.0 135.8 314.0 0.5B 3.1B 7.5B 10.278

100% x 2 50.0 0.0 50.0 3.0 91.9 560.4 0.7B 6.2B 10.4B 10.470

100% x 3 75.1 0.1 24.8 4.0 120.2 824.1 1.2B 8.7B 16.8B 13.028

100% x 4 100.0 0.0 0.0 5.0 76.8 1054.8 1.4B 11.6B 19.5B 13.156

For 4 CPUs (of a 4 CPU system):

Power (Watts) = (% CPU load * 1.176217e-01) + 3.461561

1% CPU load is about 117.62 mW

Coefficient of determination R^2 = 0.809961 (good)

Energy (Watt-seconds) = (bogo op * 8.465141e-09) + 3.201355

1 bogo op is about 8.47 nWs

Coefficient of determination R^2 = 0.911274 (strong)

Energy (Watt-seconds) = (CPU cycle * 1.026249e-09) + 3.542463

1 CPU cycle is about 1.03 nWs

Coefficient of determination R^2 = 0.841894 (good)

Energy (Watt-seconds) = (CPU instruction * 6.044204e-10) + 3.201433

1 CPU instruction is about 0.60 nWs

Coefficient of determination R^2 = 0.911272 (strong)

The results at the end are estimates based on the gathered samples. The samples are compared against the fitted linear regression using the coefficient of determination (R^2); a value of 1 is a perfect linear fit, and values less than 1 indicate a poorer fit.
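The regression step can be sketched as an ordinary least-squares fit of y = m*x + c, with R^2 = 1 - SS_res/SS_tot, where SS_res is the sum of squared residuals about the fitted line and SS_tot the sum of squares about the mean of y. This is a minimal illustration of that textbook technique under hypothetical names (linreg(), linreg_t), not power-calibrate's source.

```c
#include <stddef.h>

typedef struct {
	double slope;		/* m in y = m*x + c */
	double intercept;	/* c */
	double r2;		/* coefficient of determination */
} linreg_t;

/* Ordinary least-squares fit of y = m*x + c over n samples.
   Returns 0 on success, -1 if n < 2 or all x values are equal. */
int linreg(const double *x, const double *y, size_t n, linreg_t *out)
{
	double sx = 0.0, sy = 0.0, sxx = 0.0, sxy = 0.0;
	double mean_y, ss_tot = 0.0, ss_res = 0.0, denom;
	size_t i;

	if (n < 2)
		return -1;
	for (i = 0; i < n; i++) {
		sx += x[i];
		sy += y[i];
		sxx += x[i] * x[i];
		sxy += x[i] * y[i];
	}
	denom = (double)n * sxx - sx * sx;
	if (denom == 0.0)
		return -1;
	out->slope = ((double)n * sxy - sx * sy) / denom;
	out->intercept = (sy - out->slope * sx) / (double)n;

	/* R^2 = 1 - SS_res / SS_tot */
	mean_y = sy / (double)n;
	for (i = 0; i < n; i++) {
		double fit = out->slope * x[i] + out->intercept;

		ss_res += (y[i] - fit) * (y[i] - fit);
		ss_tot += (y[i] - mean_y) * (y[i] - mean_y);
	}
	/* A constant y is treated as a (degenerate) perfect fit */
	out->r2 = (ss_tot > 0.0) ? 1.0 - ss_res / ss_tot : 1.0;
	return 0;
}
```

Fed with per-run CPU load (x) and measured Watts (y), a fit like this produces a slope and intercept of the same form as the "Power (Watts) = ..." lines above; the sketch has not been checked against those exact figures.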

For more accurate results, increase the run time (-r option) and also increase the number of samples (-s option).

Power-calibrate is available in Ubuntu Wily 15.10. It is just an academic toy for getting some power estimates and may be useful to compare compute vs power metrics across different x86 CPUs. I've not been able to verify how accurate it really is, so I am interested to see how this works across a range of systems.

Friday, 18 September 2015

NumaTop: A NUMA system monitoring tool

NumaTop is a useful tool developed by Intel for monitoring runtime memory locality and analysis of processes on Non-Uniform Memory Access (NUMA) systems. NumaTop can identify potential NUMA related performance bottlenecks and hence help one to re-balance memory/CPU allocations to maximise the potential of a NUMA system.

The tool uses perf to collect deeper system statistics and hence needs to be run with root privileges; it will only run on NUMA systems. I've recently packaged NumaTop and it is now available in Ubuntu Wily 15.10, and the source is available on github.

[Figure: Initial "top"-like process view]

One can select specific processes and drill down into characteristics such as memory latencies or call chains to see where code is hot.

[Figure: Observing a specific process]

[Figure: ..and observing memory latencies]

[Figure: Observing per-node CPU and memory statistics]

Monday, 14 September 2015

light-weight process stats with cpustat

A while ago I was working on identifying busy processes on small Ubuntu devices and required a tool that could look at per process stats (from /proc/$pid/stat) in a fast and efficient way with minimal overhead. There are plenty of tools such as "top" and "atop" that can show per-process CPU utilisation stats, but most of these aren't useful on really slow low-power devices as they consume several tens of megacycles collecting and displaying the results.

I developed cpustat to be compact and efficient, as well as to provide enough stats to allow me to easily identify CPU-sucking processes. To optimise the code, I used tools such as perf to identify code hotspots, as well as valgrind's cachegrind to identify poorly designed, cache-inefficient data structures.

The majority of the savings were in the parsing of data from /proc: originally I used simple fscanf() style parsing, but over several optimisation rounds I ended up with hand-crafted numeric and string parsing that saved several hundred thousand cycles per iteration.
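As an illustration of the kind of hand-rolled parsing described above (not cpustat's actual code), the sketch below pulls a numeric field out of a /proc/$pid/stat line without fscanf(). It skips to the last ')' because the command name in field 2 may itself contain spaces or parentheses, then walks the space-separated fields and converts digits directly. stat_field_u64() is a hypothetical name; field numbers follow proc(5), e.g. 14 is utime and 15 is stime, both in clock ticks.

```c
#include <stdint.h>
#include <string.h>

/* Convert the decimal digits at *pp, advancing *pp past them.
   No overflow checking: /proc counters fit comfortably in 64 bits. */
static uint64_t scan_u64(const char **pp)
{
	const char *p = *pp;
	uint64_t v = 0;

	while (*p >= '0' && *p <= '9') {
		v = v * 10 + (uint64_t)(*p - '0');
		p++;
	}
	*pp = p;
	return v;
}

/* Extract unsigned numeric field n (n >= 3) from a /proc/$pid/stat line.
   Returns 0 on success, -1 if the field is missing or not a plain number. */
int stat_field_u64(const char *line, int n, uint64_t *val)
{
	const char *p;
	int field;

	if (n < 3)	/* fields 1 (pid) and 2 (comm) are not handled here */
		return -1;
	/* comm may contain ' ' or ')', so start after the LAST ')' */
	p = strrchr(line, ')');
	if (!p)
		return -1;
	p++;
	/* each space we step over moves us to the start of the next field */
	for (field = 2; field < n; field++) {
		p = strchr(p, ' ');
		if (!p)
			return -1;
		p++;
	}
	if (*p < '0' || *p > '9')
		return -1;
	*val = scan_u64(&p);
	return 0;
}
```

Fields 10 and 12 (minflt and majflt) can be read the same way, which is handy for page fault accounting.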

I also made some optimisations by tweaking the hash table sizes to match the input data more appropriately. Also, by careful re-use of heap allocations, I was able to reduce malloc()/free() calls and save some heap management overhead.

Some very frequent string look-ups were replaced with hash lookups, and frequently accessed data was duplicated rather than referenced indirectly, keeping the data local to reduce cache stalls and hence speed up comparisons and lookups.

The source has been statically checked by CoverityScan, cppcheck and also clang's scan-build to check for bugs introduced in the optimisation steps.

cpustat is now available in Ubuntu 15.10 Wily Werewolf. Visit the cpustat project page for more details.

[Figure: Example of cpustat]

Tuesday, 8 September 2015

static code analysis (revisited)

A while ago I was extolling the virtues of static analysis tools such as cppcheck, smatch and CoverityScan for C and C++ projects. I've recently added to this armoury the clang analyser scan-build, which has been most helpful in finding even more obscure bugs that the previous three did not catch.

Using scan-build is very simple indeed: install clang and then, in your source tree, just build your project with scan-build. For example, for a project built by make, use:

scan-build make

..and at the end of a build one will see a summary message:

scan-build make

scan-build: 366 bugs found.

scan-build: Run 'scan-view /tmp/scan-build-2015-09-08-094505-16657-1' to examine bug reports.

scan-build: The analyzer encountered problems on some source files.

scan-build: Preprocessed versions of these sources were deposited in '/tmp/scan-build-2015-09-08-094505-16657-1/failures'.

scan-build: Please consider submitting a bug report using these files:

scan-build: http://clang-analyzer.llvm.org/filing_bugs.html

..and running scan-view will show the issues found. For an example of the kind of results scan-build can find, I ran it against a systemd build (head commit 4df0514d299e349ce1d0649209155b9e83a23539).

As one can see, scan-build is a powerful and easy-to-use open-source static analyser. I heartily recommend using it on every C and C++ project.

Monday, 7 September 2015

Monitoring temperatures with psensor

While doing some thermal debugging this weekend I stumbled upon the rather useful temperature monitoring utility "Psensor". I configured it to update stats every second and according to perf it was only using 0.02 CPU's worth of compute, so it seems relatively lightweight and shouldn't contribute to warming the machine up!

I like the min/max values being clearly shown and also the ability to change graph colours and toggle data on or off. Quick, easy and effective. Not sure why I haven't found this tool earlier, but I wish I had!

Saturday, 29 August 2015

Identifying Suspend/Resume delays

The Intel SuspendResume project aims to help identify delays in suspend and resume. After seeing it demonstrated by Len Brown (Intel) at this year's Linux Plumbers Conference, I gave it a quick spin and was delighted to see how easy it is to use.

The project has some excellent "getting started" documentation describing how to configure a system and run the suspend resume analysis script which should be read before diving in too deep.

For the impatient, one can try it out using the following:

git clone https://github.com/01org/suspendresume.git

cd suspendresume

sudo ./analyze_suspend.py

..and manually resume the machine once it has completed a successful suspend.

This will create a directory containing dumps of the kernel log and ftrace output as well as an HTML page that can be loaded into your favourite web browser to view the results. One can zoom in/out of the web page to drill down and see where the delays are occurring; an example from the SuspendResume project page is shown below:

[Figure: Example web page (from https://01.org/suspendresume)]

It is a useful project, kudos to Intel for producing it. I thoroughly recommend using it to identify the delays in suspend/resume.

Friday, 7 August 2015

More ACPI table tests in fwts 15.08.00

The Canonical Hardware Enablement Team and I are continuing the work to add more ACPI table tests to the Firmware Test Suite (fwts). The latest 15.08.00 release added sanity checks for the following tables:

- ASF! (Alert Standard Format)

- FPDT (Firmware Performance Data Table)

- IORT (IO Remapping Table)

- MCHI (Management Controller Host Interface)

- STAO (Status Override Table)

- TPM2 (Trusted Platform Module 2)

- WDAT (Microsoft Hardware Watchdog Action Table)
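Table tests like these typically build on the same basic header checks. A minimal C sketch of such a check is below: it validates the 4-character signature, the 32-bit little-endian length at offset 4 of the standard 36-byte ACPI table header, and that all bytes of the table sum to zero modulo 256 (the ACPI checksum rule). acpi_table_sane() is a hypothetical helper for illustration and is not taken from fwts, whose per-table tests check far more than this.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define ACPI_HEADER_LEN 36	/* standard System Description Table header */

/* Returns 0 if buf holds a plausible ACPI table with the expected
   4-character signature, a sane length and a valid checksum; -1 otherwise. */
int acpi_table_sane(const uint8_t *buf, size_t buf_len, const char *sig)
{
	uint32_t length;
	uint8_t sum = 0;
	size_t i;

	if (buf_len < ACPI_HEADER_LEN)
		return -1;
	if (memcmp(buf, sig, 4) != 0)
		return -1;
	/* Length field: little-endian 32-bit value at offset 4 */
	length = (uint32_t)buf[4] | ((uint32_t)buf[5] << 8) |
		 ((uint32_t)buf[6] << 16) | ((uint32_t)buf[7] << 24);
	if (length < ACPI_HEADER_LEN || length > buf_len)
		return -1;
	/* Every byte of the table, including the checksum byte at offset 9,
	   must sum to zero modulo 256 */
	for (i = 0; i < length; i++)
		sum = (uint8_t)(sum + buf[i]);
	return sum == 0 ? 0 : -1;
}
```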

Our aim is to continue to add support for existing and new ACPI tables to make fwts a comprehensive firmware test tool. For more information about fwts, please refer to the fwts jump start wiki page.
